Every device, application and service needs an IP address to connect. As enterprises expand across data centers, branch offices and multiple clouds, managing IP addresses becomes increasingly complex. Without a unified system, NetOps and CloudOps teams often rely on spreadsheets, separate tools and ticketing systems to allocate and track IPs. The result? Limited visibility, wasted resources and the constant risk of IP conflicts.

Infoblox Universal IP Address Management™, part of the Infoblox Universal DDI™ Product Suite, automates IP address management (IPAM), centralizes visibility and supports policy-driven IP address allocation across hybrid, multi-cloud networks. Universal IPAM integrates with Google’s internal range to automate the management of internal range resources and enforce IPAM policies on Google Cloud resources.

What Makes IPAM Challenging in Dynamic Networks

Managing IP addresses effectively across distributed networks is critical to seamless connectivity. Google’s internal range lets enterprises reserve IP address blocks for Virtual Private Cloud (VPC) resources in Google Cloud. However, IP address management can become overwhelming when addresses change frequently across on-premises and hybrid cloud networks. This dynamic environment brings its own set of challenges:

- Overlapping IP Addresses: With disparate systems, there is an increased risk of assigning the same IP address blocks to two different networks, leading to overlapping IPs, routing issues and outages.

- Operational Silos: NetOps and CloudOps teams work in silos and use different tools, from on-premises IPAM tools to cloud-based IPAM solutions, so no team has a complete view of the address space.

- IP Address Exhaustion: Available IP addresses can run out, especially within Google Kubernetes Engine (GKE) clusters. These clusters often use default IP allocations that are larger than needed, which quickly depletes private IP address pools if not carefully managed.
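
To make the exhaustion risk concrete, the arithmetic can be sketched with Python's standard `ipaddress` module. The figures used here (a /14 Pod range carved into one /24 block per node) are assumptions chosen for illustration, not values stated in this article; actual GKE allocations depend on cluster mode and configuration.

```python
import ipaddress

def node_capacity(pod_range: str, per_node_prefix: int) -> int:
    """Return how many per-node blocks of the given prefix length fit in pod_range."""
    network = ipaddress.ip_network(pod_range)
    if per_node_prefix < network.prefixlen:
        raise ValueError("per-node block is larger than the Pod range")
    return 2 ** (per_node_prefix - network.prefixlen)

# Assumed, illustrative values: a /14 Pod range and a /24 block per node.
pod_range = "10.0.0.0/14"
print(ipaddress.ip_network(pod_range).num_addresses)  # 262144 addresses reserved
print(node_capacity(pod_range, 24))                   # 1024 node-sized blocks
```

Under these assumptions, a cluster that never grows beyond a few dozen nodes would leave most of the reserved range idle, which is the kind of waste that careful range sizing is meant to avoid.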

Infoblox has partnered with Google Cloud to address these challenges.

A Smarter Way to Manage IP Addresses with Infoblox and Google Cloud

The internal range in Google Cloud is integrated into the Infoblox Universal IPAM solution, enabling efficient management of IP addresses for Google Cloud networks at scale. Infoblox can discover Google Virtual Private Cloud (VPC) networks and IP addresses across Google Cloud environments. The new integration simplifies and automates the management of internal range resources and enforces IPAM policies on Google Cloud resources to avoid overlapping IP addresses.

The integration enables enterprises to:

- Create and reserve internal ranges in Google Cloud through the Infoblox Portal, giving NetOps centralized control.

- View all Google internal range resources in the Infoblox Portal for better visibility.

- Discover VPC peering relationships and show how internal ranges are associated with VPCs and subnets.

- Plan networks and ensure IP address blocks don’t overlap across headquarters, branch offices, public clouds such as Google Cloud and other locations.
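
The overlap check behind this kind of planning can be illustrated with a minimal sketch. It uses only Python's standard library and is not how Universal IPAM is implemented; the location names and CIDR blocks are hypothetical.

```python
import ipaddress
from itertools import combinations

def find_overlaps(blocks: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of location names whose CIDR blocks overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in blocks.items()}
    return [
        (a, b)
        for a, b in combinations(parsed, 2)
        if parsed[a].overlaps(parsed[b])
    ]

# Hypothetical plan; names and CIDRs are illustrative only.
plan = {
    "headquarters": "10.0.0.0/16",
    "branch-office": "10.1.0.0/20",
    "google-cloud-vpc": "10.0.128.0/17",
}
print(find_overlaps(plan))  # [('headquarters', 'google-cloud-vpc')]
```

Here the Google Cloud block falls inside the headquarters block, so the pair is flagged before either range reaches production.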

Use Cases in Detail

Use Case 1: Plan and Assign IP Address Blocks in Universal IPAM

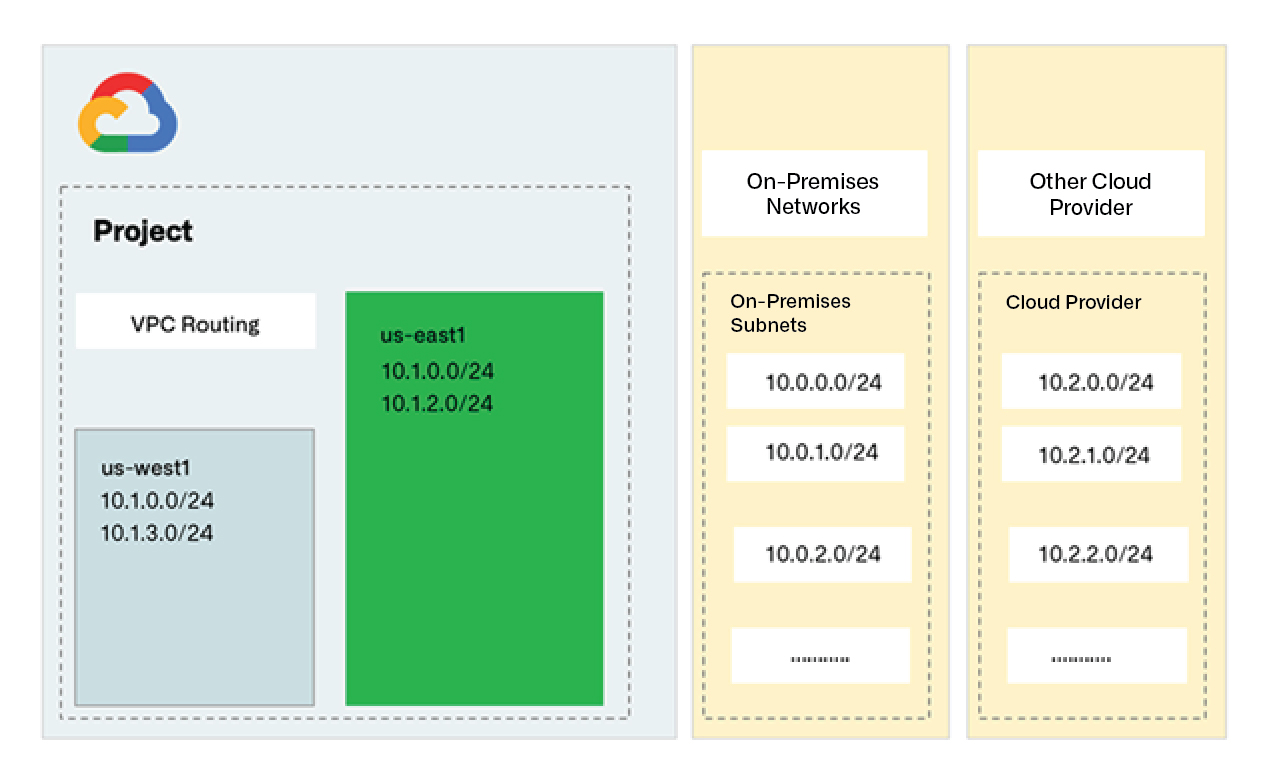

As enterprises scale, multiple teams can assign overlapping address blocks across on-premises networks, Google Cloud and other providers, resulting in IP conflicts and service disruptions. From Universal IPAM, NetOps teams can centrally plan, reserve and delegate IP address spaces across hybrid networks. Internal ranges for Google Cloud VPC networks and subnets can be defined directly within the Infoblox Portal. This helps prevent IP address exhaustion, streamlines provisioning and supports predictive capacity planning, so teams know where IP address space is available before deploying new workloads.

Figure 1. Assign IP address blocks in Universal IPAM
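
As a rough illustration of planning and reserving address space, the sketch below picks the first free block of a requested size from a parent range. It is a simple first-fit search written for this example, not the allocation logic of Universal IPAM, and the parent range and reserved blocks are hypothetical.

```python
import ipaddress

def next_free_block(supernet: str, reserved: list[str], prefix: int):
    """Return the first subnet of the given prefix length that overlaps no reserved block, or None."""
    parent = ipaddress.ip_network(supernet)
    taken = [ipaddress.ip_network(r) for r in reserved]
    for candidate in parent.subnets(new_prefix=prefix):
        if not any(candidate.overlaps(t) for t in taken):
            return candidate
    return None

# Hypothetical reservations inside a 10.10.0.0/16 parent range.
reserved = ["10.10.0.0/20", "10.10.16.0/20", "10.10.48.0/20"]
print(next_free_block("10.10.0.0/16", reserved, 20))  # 10.10.32.0/20
```

Knowing which block is free before a workload is deployed is the point of the capacity planning described above; a central system would additionally record the new reservation so the next request cannot reuse it.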

Use Case 2: Reserve IP Address Blocks for On-Premises and Other Cloud Providers in Google Cloud

Enterprises increasingly connect on-premises networks with Google Cloud and other public clouds. Without coordinated IP management, hybrid connectivity can easily introduce overlapping IP spaces that break communication between environments. The Universal IPAM integration with Google internal range addresses this by letting teams reserve, in Google Cloud, the address blocks already assigned to on-premises networks and other cloud providers, using the “EXTERNAL_TO_VPC” and “NOT_SHARED” attributes. This prevents those blocks from being reused in Google Cloud and keeps hybrid expansion free of conflicts.

Figure 2. Protect on-premises and external IP address blocks in Google Cloud

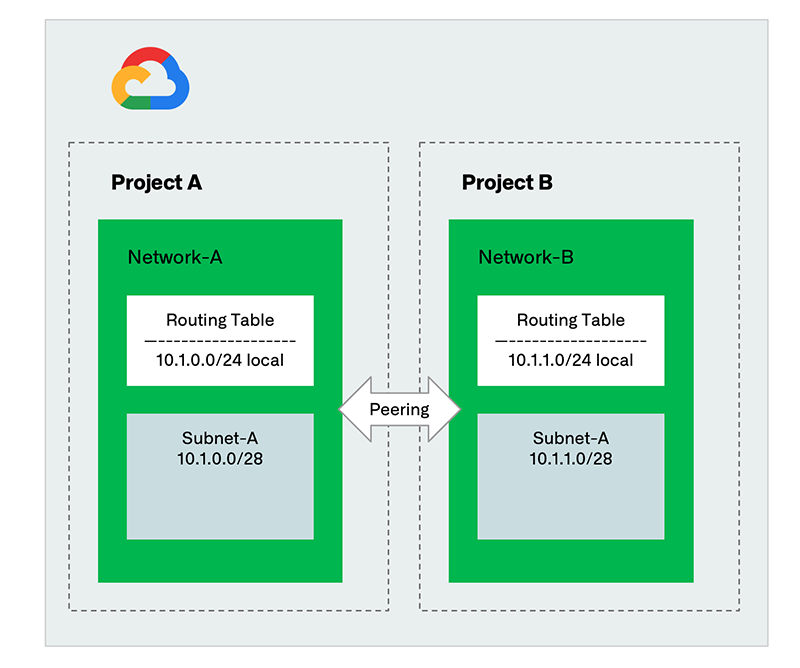
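
For readers who want to picture what such a reservation looks like, here is a hedged sketch that builds a request body for an internal range. The attribute values come from the text above; the field names (`ipCidrRange`, `network`, `usage`, `peering`, `description`) are assumed to match the Google Cloud internal range resource and should be verified against the current API reference. The project and network path is hypothetical.

```python
import ipaddress
import json

def external_reservation(cidr: str, network: str, description: str) -> dict:
    """Build a request body that reserves an off-cloud block inside a VPC network."""
    block = ipaddress.ip_network(cidr)  # raises ValueError on a malformed CIDR
    return {
        "ipCidrRange": str(block),
        "network": network,
        "usage": "EXTERNAL_TO_VPC",  # usage attribute named in the article
        "peering": "NOT_SHARED",     # peering attribute named in the article
        "description": description,
    }

body = external_reservation(
    "192.168.0.0/20",
    "projects/example-project/global/networks/example-vpc",  # hypothetical path
    "On-premises data center block",
)
print(json.dumps(body, indent=2))
```

The sketch only constructs and prints the payload; it sends nothing to Google Cloud. In the integration described here, the Infoblox Portal is the place where such reservations are created.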

Use Case 3: Get Visibility into VPC Peering and Subnet Relationships

As Google Cloud environments grow, maintaining visibility into VPC peering connections, subnets and internal ranges becomes critical for both planning and troubleshooting. With the integration, Universal IPAM discovers VPC peering relationships, so teams can see exactly how internal ranges map to specific projects and subnets. This visibility helps prevent overlapping IP addresses and improves collaboration between NetOps and CloudOps teams.

Figure 3. Discover VPC peering and subnet relationships in Universal IPAM
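
Why peering visibility matters for overlap prevention can be shown with a small sketch. It assumes discovery has already produced a list of ranges per VPC and a list of peering pairs; the data structure and VPC names are hypothetical and do not represent the Universal IPAM data model.

```python
import ipaddress

def peered_conflicts(ranges, peerings):
    """Return (vpc_a, cidr_a, vpc_b, cidr_b) for overlapping ranges in directly peered VPCs."""
    conflicts = []
    for vpc_a, vpc_b in peerings:
        for cidr_a in ranges.get(vpc_a, []):
            for cidr_b in ranges.get(vpc_b, []):
                if ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b)):
                    conflicts.append((vpc_a, cidr_a, vpc_b, cidr_b))
    return conflicts

# Hypothetical discovery output: subnet ranges per VPC and peering pairs.
ranges = {
    "vpc-prod": ["10.0.0.0/20", "10.0.16.0/20"],
    "vpc-shared": ["10.0.16.0/24"],
    "vpc-dev": ["10.0.0.0/20"],
}
peerings = [("vpc-prod", "vpc-shared"), ("vpc-shared", "vpc-dev")]
print(peered_conflicts(ranges, peerings))
# [('vpc-prod', '10.0.16.0/20', 'vpc-shared', '10.0.16.0/24')]
```

In this example `vpc-prod` and `vpc-dev` use the same block but are not directly peered, so only the conflict between the directly peered pair is reported. Without knowledge of the peering relationships, there would be no way to tell which duplicates actually matter.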

Conclusion

The Infoblox Universal IPAM and Google Cloud internal range integration empowers enterprises to take control of IPAM across on-premises and Google Cloud environments. It eliminates the time-consuming and error-prone manual coordination between NetOps and CloudOps teams. Enterprises benefit from:

- Unified IP Address Management: Manage your IP addresses from a single console across hybrid networks.

- Policy Enforcement: Enforce IPAM policies on Google Cloud and avoid overlapping IPs across hybrid networks.

- Enhanced Visibility: Gain visibility into existing VPCs, subnets and VPC network peering across Google Cloud environments.

Learn More

- See a quick video of how Infoblox Universal IPAM integration with Google internal range helps your teams operate faster and smarter.

- Read the solution note to learn how to unify and simplify IPAM across hybrid, multi-cloud networks.