Authors: Krupa Srivatsan and Bart Lenaerts

How adversaries innovate with GenAI and the case for predictive intelligence

INTRO

Generative AI, particularly Large Language Models (LLMs), is driving a transformation in cybersecurity. Adversaries are attracted to GenAI because it lowers the barrier to creating deceptive content. Actors use it to enhance the efficacy of intrusion techniques such as social engineering and detection evasion.

This blog describes common examples of malicious GenAI usage, such as deepfakes, chatbot automation and code obfuscation. More importantly, it makes the case for early warning of threat activity and for predictive threat intelligence capable of disrupting actors before they execute their attacks.

Example 1: Deepfake scams using voice cloning

At the end of 2024, the FBI warned [1] that criminals were using generative AI to commit fraud on a larger scale and to make their schemes more believable. GenAI tools like voice cloning reduce the time and effort needed to deceive targets with convincing audio messages. Voice cloning tools can even correct the human errors, such as a foreign accent or unusual vocabulary, that might otherwise signal fraud. While creating synthetic content isn’t illegal, it can facilitate crimes like fraud and extortion. Criminals use AI-generated text, images, audio, and videos to enhance social engineering, phishing, and financial fraud schemes.

Especially worrying is how easily cybercriminals can access these tools and the lack of security safeguards. A recent Consumer Reports investigation [2] of six leading publicly available AI voice cloning tools found that five had safeguards that could easily be bypassed, making it simple to clone a person’s voice without their consent.

Voice cloning technology works by taking an audio sample of a person speaking and using it to generate synthetic audio in that person’s voice. Without safeguards in place, anyone who registers an account can simply upload audio of an individual speaking, such as a clip from a TikTok or YouTube video, and have the service imitate them.

Actors have used voice cloning in various scenarios, from large-scale deepfake videos promoting cryptocurrency scams to imitating voices during individual phone calls. A recent example that garnered media attention is the so-called “grandparent” scam [3], in which a fake family emergency is used to persuade the victim to transfer funds.

Example 2: AI-powered chatbots

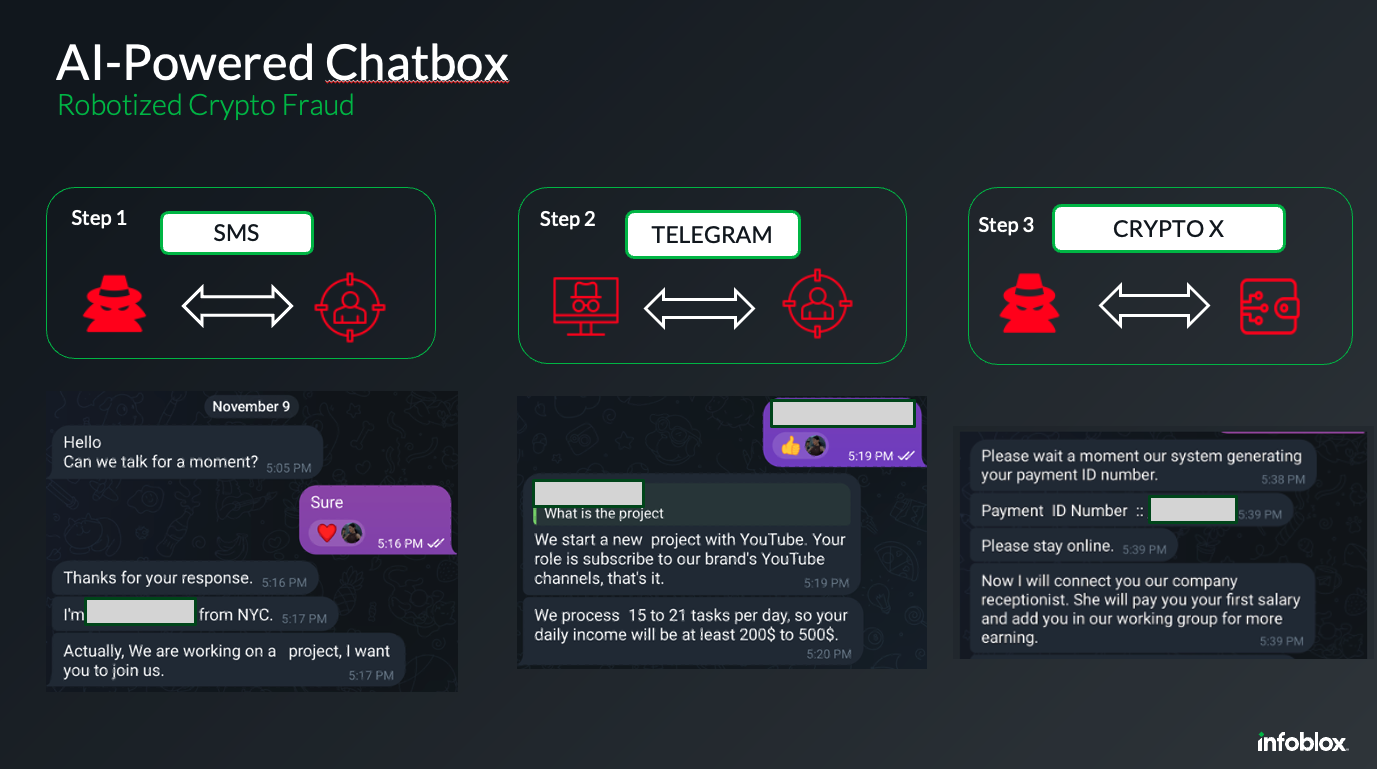

Actors often select their victims carefully, gathering insight into their interests before setting them up for scams. This initial research is used to craft the smishing message that draws the victim into a conversation. Personal notes like “I read your last social post and wanted to become friends” or “Can we talk for a moment?” are some examples our intel team discovered (step 1 in Picture 2). While some of these messages may be accompanied by AI-modified pictures, what matters is that actors invite their victims to the next step: a conversation on Telegram or another actor-controlled medium, far away from security controls (step 2 in Picture 2).

Picture 2: Sample AI-driven conversation

Once the victim is on the new medium, the actor uses several tactics to keep the conversation going, such as invitations to local golf tournaments, Instagram follows or AI-generated images. These AI bot-driven conversations go on for weeks and include additional steps, like asking for a thumbs-up on YouTube or even a social media repost. At this stage, the actor is assessing the victim and seeing how they respond. Sooner or later, the actor shows some goodwill and creates a fake account for the victim. Each time the victim responds positively to one of the actor’s requests, the balance in the fake account increases. Later, the actor may even request small investments, promising an ROI of more than 25 percent. When the victim asks to collect their gains (step 3 in Picture 2), the actor requests access to the victim’s crypto account and exploits all the trust built up so far. At this point, the scam comes to an end and the actor steals the cryptocurrency in the account.

While these conversations are time-intensive, they are rewarding for the scammer and can yield tens of thousands of dollars in ill-gotten gains. By using AI-driven chatbots, actors have found a productive way to automate these interactions and make their efforts more efficient.

Infoblox Threat Intel tracks these scams to optimize its threat intelligence production.

Common characteristics found in malicious chatbots include:

- Making AI-style grammar errors, such as an extra space after a period, or referencing foreign languages

- Using vocabulary that includes fraud-related terms

- Forgetting details from past conversations

- Repeating messages mechanically due to poorly trained AI chatbots (also known as parroting)

- Making illogical requests, like asking if you want to withdraw your funds at irrational moments in the conversation

- Citing fake press releases posted on malicious sites

- Opening conversations with commonly used phrases to lure the victim

- Relying on specific cryptocurrencies commonly used in criminal communities

Combining these fingerprints allows threat intel researchers to spot emerging campaigns and trace them back to the actors and their malicious infrastructure.
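To make the idea of combining fingerprints concrete, here is a minimal Python sketch that checks a conversation for four of the characteristics listed above and counts how many are present. The term list, opening phrases and function names (`fingerprint`, `score`) are hypothetical illustrations, not Infoblox’s actual indicators or tooling; real detection would need far richer signals and careful tuning.

```python
import re
from collections import Counter

# Hypothetical indicator lists, for illustration only.
FRAUD_TERMS = {"guaranteed", "withdraw", "profit", "wallet", "usdt"}
LURE_OPENERS = ("can we talk for a moment", "i read your last social post")


def fingerprint(messages: list[str]) -> dict[str, bool]:
    """Flag a few of the chatbot characteristics in one conversation."""
    text = " ".join(messages).lower()
    normalized = [m.strip().lower() for m in messages]
    counts = Counter(normalized)
    return {
        # Formatting quirk: two spaces after a period, e.g. "Hello.  How are you"
        "double_space_after_period": any(re.search(r"\.  \S", m) for m in messages),
        # Fraud-related vocabulary anywhere in the conversation
        "fraud_vocabulary": any(re.search(rf"\b{t}\b", text) for t in FRAUD_TERMS),
        # Parroting: the exact same message sent more than once
        "parroting": any(n > 1 for n in counts.values()),
        # Conversation opens with a commonly used lure phrase
        "lure_opener": bool(messages) and normalized[0].startswith(LURE_OPENERS),
    }


def score(messages: list[str]) -> int:
    """Number of fingerprints present; higher means more suspicious."""
    return sum(fingerprint(messages).values())


conversation = [
    "Can we talk for a moment?",
    "Hello.  I made a big profit with USDT",
    "Hello.  I made a big profit with USDT",
]
print(score(conversation))  # 4
```

A simple count treats every fingerprint equally; in practice individual signals would likely be weighted, since a single quirk like an extra space is weak evidence on its own.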

Example 3: Code obfuscation and evasion

Threat actors use GenAI for more than creating human-readable content. Several news outlets have explored how GenAI helps actors obfuscate their malicious code. Earlier this year, Infosecurity Magazine [4] published details of how threat researchers at HP Wolf discovered social engineering campaigns spreading VIP Keylogger and 0bj3ctivityStealer malware, both of which involved malicious code embedded in image files. To make their campaigns more efficient, actors are using GenAI to repurpose and stitch together existing malware that evades detection. This approach also helps them set up threat campaigns faster and reduces the skills needed to build infection chains. HP Wolf reports evasion gains of 11% for email threats, while Palo Alto Networks found [5] that GenAI-rewritten malware flipped its own malware classifier model’s verdict to a false negative 88% of the time. Threat actors are clearly making progress in their AI-driven evasion efforts.
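A minimal Python sketch of why rewritten code undermines payload-based indicators: two hypothetical, functionally equivalent JavaScript snippets (the second with renamed identifiers, the kind of rewrite an LLM can produce at scale) yield different SHA-256 hashes, so a hash indicator built from the first sample misses the second. The snippets and the `is_known_bad` helper are illustrative assumptions; real classifiers go well beyond exact hash matching, although the Palo Alto Networks result above suggests that GenAI rewrites can defeat those as well.

```python
import hashlib

# Two functionally equivalent JavaScript snippets (hypothetical example). The second
# is a trivial rewrite with renamed identifiers, as an LLM could produce at scale.
original = 'var url = "http://example.test/p"; fetch(url);'
rewritten = 'const target = "http://example.test/p"; fetch(target);'


def sha256(code: str) -> str:
    """Return the hex SHA-256 digest of a code sample."""
    return hashlib.sha256(code.encode("utf-8")).hexdigest()


# Hash-based indicator derived from the first observed sample.
known_bad = {sha256(original)}


def is_known_bad(code: str) -> bool:
    """Exact-match lookup against the hash indicator set."""
    return sha256(code) in known_bad


print(is_known_bad(original))   # True
print(is_known_bad(rewritten))  # False: same behavior, different hash
```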

Making the case for modernizing threat research

Because AI-driven attacks pose significant detection-evasion challenges, defenders need to look beyond traditional tools, such as sandboxing or indicators derived from incident forensics, to produce effective threat intelligence. One such opportunity lies in tracking pre-attack activity instead of sending the latest suspicious payload to a slow sandbox.

Much like a standard software development lifecycle, threat actors go through multiple stages before launching attacks. First, they develop malicious code or use GenAI to generate new variants of it. Next, they set up infrastructure such as email delivery networks or hard-to-trace traffic distribution systems. This often goes hand in hand with registering new domains or, worse, hijacking existing ones.

Finally, the attack goes into “production”: the domains are weaponized and ready to deliver malicious payloads. This is the stage where traditional security tools attempt to detect and stop threats, because it involves easily accessible endpoints or network egress points within the customer’s environment. Because GenAI tools enable evasion and deception, detection at this point may not be effective, as actors continuously alter their payloads or mimic trustworthy sources.

Predictive Intelligence based on DNS Telemetry

At Infoblox, finding actors and their malicious infrastructure before they attack is at the core of our team’s mission. Starting from a single domain registration, combined with worldwide DNS telemetry and decades of threat expertise, Infoblox Threat Intel leverages cutting-edge data science to identify even the stealthiest actors. Some of these, like VexTrio Viper, not only execute attacks but also enable thousands of affiliates to deliver seemingly unrelated content to the most vulnerable victims.

Infoblox threat researchers intercept actor activity at the early stages of an attack, while new malicious infrastructure is being configured. By using information such as new domain registrations, DNS records and query resolutions, Infoblox leverages data that is NOT prone to GenAI alteration. Why? Because DNS data is visible to multiple stakeholders (domain owner, registrar, DNS server, client, destination) and must be 100% correct for the connection to work. Simply put, DNS is an essential component of the internet that is hard to fool and ideally suited for research.
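The idea of catching infrastructure while it is being configured can be illustrated with a toy heuristic in Python: flag a domain that was registered recently, already has DNS records in place, and has not yet been queried by any client. The `DomainObservation` record, the 30-day threshold and the `looks_staged` function are hypothetical assumptions for illustration, not Infoblox’s detection logic. On its own, a rule like this would also flag many benign new domains, so it could only ever be one signal among many.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DomainObservation:
    """Hypothetical record combining registration data with DNS telemetry."""
    name: str
    registered: date
    record_types: set[str] = field(default_factory=set)  # e.g. {"A", "MX"}
    client_queries: int = 0  # client resolutions observed so far


def looks_staged(obs: DomainObservation, today: date, max_age_days: int = 30) -> bool:
    """Flag young domains whose DNS is configured but that no client has used yet."""
    young = (today - obs.registered).days <= max_age_days
    configured = bool(obs.record_types & {"A", "AAAA", "MX", "CNAME"})
    unused = obs.client_queries == 0
    return young and configured and unused


today = date(2024, 6, 1)
fresh = DomainObservation("pay-example.test", date(2024, 5, 28), {"A", "MX"})
established = DomainObservation("example.org", date(2010, 1, 1), {"A"}, client_queries=5000)
print(looks_staged(fresh, today))        # True
print(looks_staged(established, today))  # False
```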

DNS analytics has another advantage: domains and malicious DNS infrastructure are often configured in advance of a threat campaign or individual attack. As new threat intelligence is created from these domain changes, our experts also monitor whether clients use the malicious domains discovered, in order to track the quality of the intelligence produced. The results have been spectacular. In 2024, Infoblox Threat Intel achieved a “Protection Before Engagement” rate of 77.1%. In other words, Infoblox Threat Intel identified more than ¾ of all discovered malicious domains BEFORE any interaction with the domain happened. This metric not only demonstrates the quality of Infoblox Threat Intel but is also one of the few truly predictive intelligence metrics in the industry. Importantly, the false positive rate in 2024 remained at 0.0011%.
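The “Protection Before Engagement” metric can be sketched as the share of detected malicious domains whose detection time precedes the first client query. The Python function below is a minimal illustration under stated assumptions: the input dictionaries and the function name are hypothetical, and a domain with no recorded client query is counted as protected before engagement. It is not Infoblox’s published calculation.

```python
from datetime import datetime
from typing import Optional


def protection_before_engagement(
    detected: dict[str, datetime],
    first_query: dict[str, Optional[datetime]],
) -> float:
    """Share of detected malicious domains flagged before any client queried them.

    Assumption: a domain with no recorded client query (None or missing)
    counts as flagged before engagement.
    """
    if not detected:
        return 0.0
    before = 0
    for domain, t_detect in detected.items():
        t_query = first_query.get(domain)
        if t_query is None or t_detect < t_query:
            before += 1
    return before / len(detected)


detected = {
    "a.test": datetime(2024, 1, 1),
    "b.test": datetime(2024, 1, 5),
    "c.test": datetime(2024, 1, 3),
    "d.test": datetime(2024, 1, 2),
}
first_query = {
    "a.test": datetime(2024, 1, 2),  # detected a day before first query
    "b.test": datetime(2024, 1, 4),  # queried before detection
    "c.test": None,                  # never queried
}
print(protection_before_engagement(detected, first_query))  # 0.75
```

How never-queried domains are counted changes the result noticeably, so any real version of this metric would need to define that case explicitly.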

Conclusion

The AI landscape is evolving, and its impact on security is significant. With the right approaches and strategies, such as predictive intelligence derived from DNS, organizations can truly get ahead of GenAI risks and ensure that they don’t become patient zero.

To learn more about Infoblox Threat Intelligence Research, visit:

https://www.infoblox.com/threat-intel/

Request a DNS Security Workshop:

https://info.infoblox.com/sec-ensecurityworkshop-20240901-registration.html

Footnotes

- [1] https://www.ic3.gov/PSA/2024/PSA241203

- [2] https://www.nbcnews.com/tech/security/ai-voice-cloning-software-flimsy-guardrails-report-finds-rcna195131

- [3] https://www.cbc.ca/news/marketplace/marketplace-ai-voice-scam-1.7486437

- [4] https://www.infosecurity-magazine.com/news/hackers-image-malware-genai-evade/

- [5] https://thehackernews.com/2024/12/ai-could-generate-10000-malware.html