As you saw in my previous blog, How Docker Networking Works and the Importance of IPAM Functionality, Docker’s networking model enables third-party vendors to ‘plug in’ enterprise-class network solutions. Docker requires the services of an IPAM infrastructure to create network address spaces/pools and subnets, and to allocate individual IP addresses for container-based microservices. In a complex container deployment, it is important to have a service like Infoblox IPAM to help maintain consistency in a very dynamic multi-host environment where IP addresses and networks are constantly created and deleted. In this post, we examine the details of the Infoblox Docker IPAM driver for specific use cases, including command syntax.

Our focus in this post is on the user-defined network, and more specifically the bridge type. Near the end, we will build a real example of a 3-node cluster configured to share a network.

Network Subnet/Addresses Lifecycle

You can create separate networks across multiple hosts for different microservice-based applications that do not need to interact, thereby isolating the traffic between containers. You can also create a common shared network across multiple hosts for cooperating applications and their associated microservices. If each microservice has its own subnet, this can also simplify any security rules used to control traffic between microservices. Alternatively, you may have pre-defined networks or VLANs within your environment to which you would like to attach containers. The Docker container networking model (CNM), and the competing Container Network Interface (CNI), enable the creation and management of these networks to serve all of these use cases and more.

In this post, we will focus on Docker, with a later post showing similar functionality using CNI.

In Docker, networks are created using the docker network create command. This can be run manually, or by an orchestrator. In either case, you must specify the IPAM and network drivers to use, and can optionally specify a subnet. For example:

docker network create --driver bridge --ipam-driver infoblox --subnet 10.1.1.0/24 blue

creates a bridge network blue with the specified subnet, using the infoblox IPAM driver (which has previously been started as a container, and has registered itself with the docker daemon via an API – more details below). The IPAM driver is invoked here with a “RequestPool” call to allocate the specified subnet.

Optionally, the user can leave the subnet selection up to the IPAM system:

docker network create --driver bridge --ipam-driver infoblox red

creates a bridge network but does not pass any subnet into the IPAM driver. This allows the IPAM driver to decide on the subnet, using whatever logic or criteria are established by the driver. In our driver, we provide the next available network in this case; meaning, the next subnet of the appropriate size that is available.

Our driver provides options passed at driver startup that anchor these “next available networks” in a larger pool. In Infoblox terminology, this larger pool is called a “network container” (not to be confused with a Docker container). For example, if we started up the driver with the Infoblox network container “10.10.0.0/16” and a default prefix length of “24”, then allocating several networks in a row without the --subnet option would allocate 10.10.0.0/24, 10.10.1.0/24, 10.10.2.0/24, 10.10.3.0/24. If the user manually went into the grid master (GM) user interface and allocated, say, 10.10.4.0/24 and 10.10.5.0/24, then the next Docker network would get allocated as 10.10.6.0/24. This ensures that there is no accidental IP overlap, and allows the network administrator to allocate a large pool (say a /18 or /20) to the container infrastructure, leaving the detailed division of that pool to the application developers. This is a useful feature for organizing your address spaces as you plan a large deployment of container application clusters.
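To make the “next available network” behavior concrete, here is a minimal Python sketch that reproduces the example above using only the standard ipaddress module. It is an illustration of the idea, not the driver's actual code: the function name next_available_network and the in-memory list of allocated subnets are assumptions, whereas the real driver asks the Infoblox grid for this information.

```python
import ipaddress


def next_available_network(container, prefix_length, allocated):
    """Return the first subnet of the requested size inside `container`
    that does not overlap any subnet in `allocated`, or None if full.

    Hypothetical helper: the real driver delegates this search to Infoblox.
    """
    pool = ipaddress.ip_network(container)
    taken = [ipaddress.ip_network(n) for n in allocated]
    for candidate in pool.subnets(new_prefix=prefix_length):
        if not any(candidate.overlaps(t) for t in taken):
            return candidate
    return None


# Four networks allocated through Docker, plus two created by hand
# in the GM user interface, as in the example in the text.
allocated = [
    "10.10.0.0/24", "10.10.1.0/24", "10.10.2.0/24", "10.10.3.0/24",
    "10.10.4.0/24", "10.10.5.0/24",
]
print(next_available_network("10.10.0.0/16", 24, allocated))  # 10.10.6.0/24
```

Because the search walks the network container in address order and skips anything that overlaps an existing allocation, manually created subnets are respected without any extra bookkeeping on the Docker side.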

The CLI and API also allow the user to specify arbitrary network and IPAM driver options. With our driver, the user can use this to control the prefix length of the subnet:

docker network create --driver bridge \
  --ipam-driver infoblox --ipam-opt prefix-length=28 green

creates a bridge network “green” with the “next available” /28 subnet. In this way, you can conserve IPs when creating subnets for specific applications. We also use the IPAM options to provide a way to specify a network by name, rather than forcing you to either specify a subnet, or get the next available subnet. We will use this in the use case below to share a subnet between multiple hosts.

Our IPAM driver must also be able to support the various network drivers by providing parameters to initialize the network containers for local and global address spaces respectively.

When starting our driver process, the user can specify various parameters: grid connectivity parameters, the network view name, the starting address for the network container (or a comma-separated list), and the default prefix length to use for “next available network” requests. The prefix lengths and network containers are specified per address space, since the local and global spaces generally use different address ranges and subnet sizes. For example, here is a command that starts our driver:

docker run -v /var/run:/var/run -v /run/docker:/run/docker infoblox/ipam-driver \
  --plugin-dir /run/docker/plugins --driver-name infoblox \
  --grid-host 172.22.128.240 --wapi-port 443 --wapi-username admin \
  --wapi-password infoblox --wapi-version 2.3 --ssl-verify false \
  --global-view docker-global \
  --global-network-container 172.22.192.0/18 --global-prefix-length 25 \
  --local-view docker-local \
  --local-network-container 10.123.0.0/16 --local-prefix-length 24

This will allocate “global” subnets from 172.22.192.0/18 in network view “docker-global”, with a default prefix length of 25. “Local” subnets will come from 10.123.0.0/16 in network view “docker-local”, with a default prefix length of 24.
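As a quick sanity check on these sizing choices, the following Python sketch works out how many default-sized subnets each network container can hold and how many addresses each subnet contains. The helper name pool_capacity is an assumption for illustration; the container and prefix values are the ones from the command above.

```python
import ipaddress


def pool_capacity(container, prefix_length):
    """Return (number of subnets, addresses per subnet) for an IPv4
    network container carved into subnets of `prefix_length`."""
    pool = ipaddress.ip_network(container)
    if prefix_length < pool.prefixlen:
        raise ValueError("subnet cannot be larger than its container")
    subnets = 2 ** (prefix_length - pool.prefixlen)
    addresses = 2 ** (32 - prefix_length)
    return subnets, addresses


for space, container, prefix in [
    ("global", "172.22.192.0/18", 25),
    ("local", "10.123.0.0/16", 24),
]:
    subnets, addresses = pool_capacity(container, prefix)
    print(f"{space}: {subnets} subnets of {addresses} addresses each")
# global: 128 subnets of 128 addresses each
# local: 256 subnets of 256 addresses each
```

The address counts include the network and broadcast addresses, so the number of addresses usable by containers in each subnet is slightly lower.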

The other parameters specify how the Docker daemon communicates with the driver (the --plugin-dir and --driver-name as well as the -v mounts), and the connection parameters for the Infoblox grid.

Finally, when the network is no longer needed, it is deleted with the docker network rm command. At that point, the IPAM driver will receive a ReleasePool API call so that the subnet may be de-allocated.
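The pool lifecycle described in this section can be sketched as a pair of handlers that accept and return JSON-like dictionaries. This is a simplified in-memory model, not the Infoblox driver: the class name PoolStore is hypothetical, and the field names (Pool, Options, PoolID, Data) follow my reading of the libnetwork remote IPAM driver documentation, so check them against that document before relying on them.

```python
import ipaddress
import uuid


class PoolStore:
    """Toy in-memory model of RequestPool / ReleasePool handling."""

    def __init__(self, container, default_prefix):
        self.container = ipaddress.ip_network(container)
        self.default_prefix = default_prefix
        self.pools = {}  # PoolID -> allocated subnet

    def _is_free(self, subnet):
        return not any(subnet.overlaps(p) for p in self.pools.values())

    def request_pool(self, request):
        options = request.get("Options") or {}
        if request.get("Pool"):
            # The user passed --subnet: allocate exactly that subnet.
            subnet = ipaddress.ip_network(request["Pool"])
            if not self._is_free(subnet):
                raise ValueError(f"{subnet} overlaps an existing pool")
        else:
            # No subnet given: pick the next available one of the right size.
            prefix = int(options.get("prefix-length", self.default_prefix))
            subnet = next(
                (c for c in self.container.subnets(new_prefix=prefix)
                 if self._is_free(c)),
                None,
            )
            if subnet is None:
                raise ValueError("network container is full")
        pool_id = uuid.uuid4().hex
        self.pools[pool_id] = subnet
        return {"PoolID": pool_id, "Pool": str(subnet), "Data": {}}

    def release_pool(self, request):
        # Called when the network is removed; frees the subnet for reuse.
        self.pools.pop(request["PoolID"], None)
        return {}


store = PoolStore("10.10.0.0/16", 24)
blue = store.request_pool({"Pool": "10.1.1.0/24"})
red = store.request_pool({})
green = store.request_pool({"Options": {"prefix-length": "28"}})
store.release_pool({"PoolID": red["PoolID"]})
again = store.request_pool({})
print(blue["Pool"], red["Pool"], green["Pool"], again["Pool"])
```

The three requests mirror the blue, red, and green networks created earlier; the final request shows that a released subnet becomes available again.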

Container/Address Lifecycle

The IPAM driver is also called to allocate IP addresses on a given Docker network for a microservice container. The gateway address is always allocated right when the network is created. After that, addresses are allocated when an endpoint is attached to a container on a specific network. An “endpoint” is the Docker terminology for a connection between a specific container and a specific network.

An endpoint is created when a container is run and a network is specified. For example,

docker run -it --net blue ubuntu /bin/bash

would create an Ubuntu container with an interface in the “blue” network, and run the /bin/bash command. At that time, the IPAM driver would be called to allocate an IP in the “blue” network via a “RequestAddress” API call.

You may also create an endpoint by attaching an existing container to a network; in that case, the RequestAddress would be made at that time:

docker network connect blue mycontainer

On the tear-down side, you can use the docker network disconnect command, or the container will be automatically disconnected when it exits. Either way, this calls a “ReleaseAddress” API on the IPAM driver.
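The address lifecycle can be modeled in the same spirit. The sketch below is an in-memory stand-in, not the driver itself: AddressPool is a hypothetical name, and the Address and Data fields are assumed from the libnetwork remote IPAM documentation. It hands out the lowest free host address and returns it to the pool on release.

```python
import ipaddress


class AddressPool:
    """Toy model of RequestAddress / ReleaseAddress for one subnet."""

    def __init__(self, subnet):
        self.subnet = ipaddress.ip_network(subnet)
        self.in_use = set()

    def request_address(self, request):
        wanted = request.get("Address")
        if wanted:
            # A specific address was requested (for example, a gateway).
            address = ipaddress.ip_address(wanted)
            if address not in self.subnet or address in self.in_use:
                raise ValueError(f"{address} is not available")
        else:
            address = next(
                (h for h in self.subnet.hosts() if h not in self.in_use),
                None,
            )
            if address is None:
                raise ValueError("pool exhausted")
        self.in_use.add(address)
        return {"Address": f"{address}/{self.subnet.prefixlen}", "Data": {}}

    def release_address(self, request):
        # Accept the address with or without a prefix length.
        address = ipaddress.ip_address(request["Address"].split("/")[0])
        self.in_use.discard(address)
        return {}


blue_pool = AddressPool("10.1.1.0/24")
gateway = blue_pool.request_address({})     # at network creation
first = blue_pool.request_address({})       # docker run --net blue ...
blue_pool.release_address({"Address": first["Address"]})  # container exits
second = blue_pool.request_address({})      # docker network connect ...
print(gateway["Address"], first["Address"], second["Address"])
# 10.1.1.1/24 10.1.1.2/24 10.1.1.2/24
```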

Example: A shared, flat container address space

Now that you have learned the basic commands for our IPAM driver to create networks and allocate IP addresses, let’s expand on how to put it to use. In the previous blog, we discussed how the macvlan driver can enable containers to have addresses on the external network, but macvlan is new in Docker 1.12. Here, we demonstrate a simple technique to enable similar functionality using the ‘user-defined’ bridge driver and the Infoblox IPAM driver, albeit with some manual intervention.

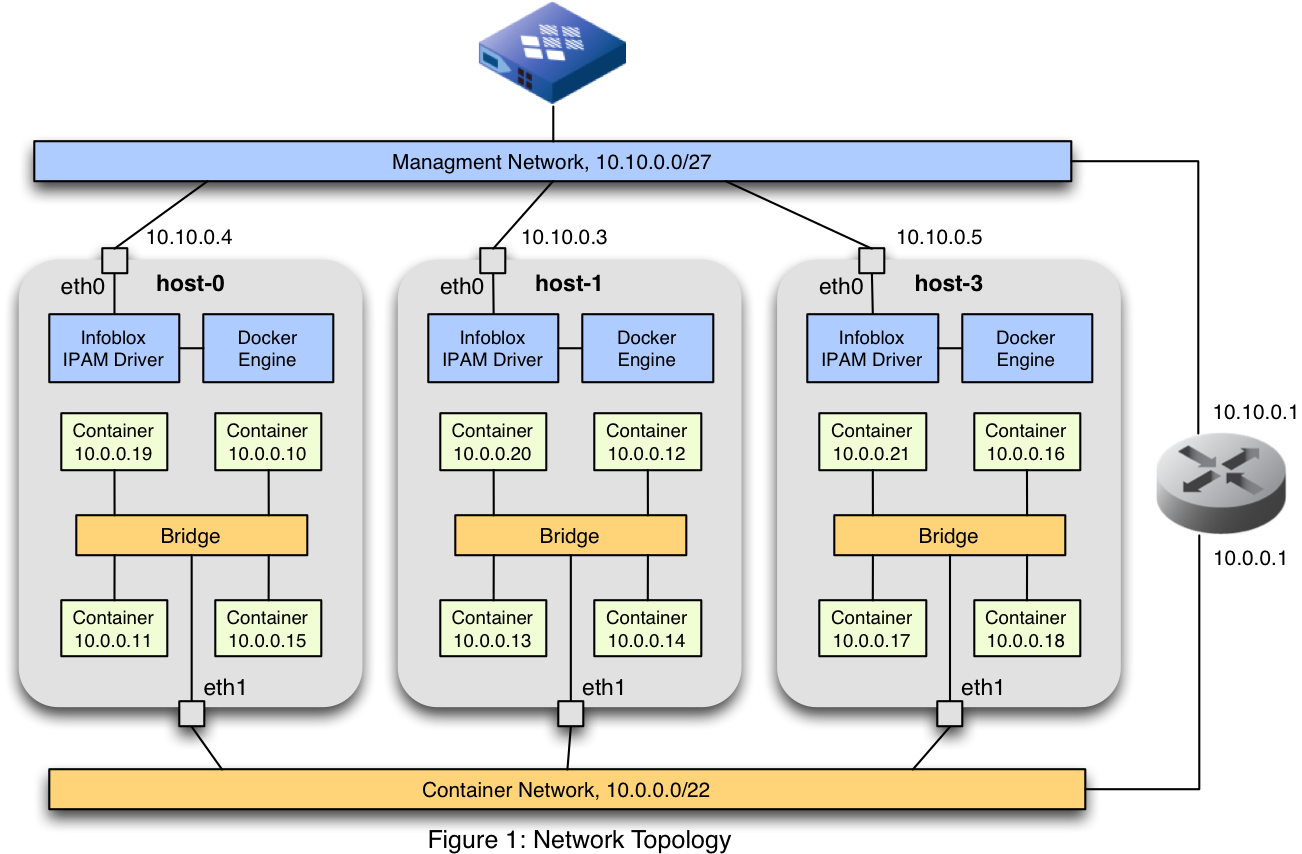

In this scenario, we have three hosts with two NICs each attached to separate physical networks. One NIC we will call the management NIC and it will be eth0 on these hosts, and have an IP address. The second NIC will remain unnumbered, meaning it has no IP associated with it. Instead, it is just used to bridge the internal Linux bridge with the external physical network.

For this example, we will use the logical network topology shown in Figure 1.

Looking at the diagram, notice that each host is attached to the management network, and this is also where you will find the Infoblox appliance. There is an instance of the Docker daemon and the Infoblox IPAM driver running on each host. These two communicate with one another via a Unix domain socket, and the IPAM driver communicates with the Infoblox appliance via HTTPS over the management network. Also on each host is a bridge – this is the bridge that is created by the docker network create command, which we will see how to do below. Additionally, we have added the eth1 interfaces to these bridges. Those interfaces are connected to another network, the container network. The host NICs themselves do not have IP addresses, nor does the bridge.

Once this environment is completely configured, the parts shown in orange above will all constitute a single L2 broadcast domain. When a container puts a broadcast frame (say, an ARP request) on its local Linux bridge, the bridge will push that frame to all containers on the local host, as well as out the eth1 interface onto the external network. From there it will be delivered to the other hosts and in turn their containers. This means that traffic is allowed out the eth1 interfaces from any container to any other container.

We also need a common L3 subnet to make the connectivity fully functional. This is where our IPAM driver comes in. Rather than independently allocating subnets, we use a driver-specific --ipam-opt flag (“network-name”) to tell the driver to use the same subnet on each host. The subnet is tagged in Infoblox with an extensible attribute corresponding to this value, allowing the driver to search Infoblox for the requested, named subnet. We’ll see this in more detail below.
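The find-or-create logic behind the network-name option can be sketched as follows. The Grid class is a toy stand-in for the shared Infoblox grid (it is not the WAPI), and request_named_pool is a hypothetical name; only the “Network Name” attribute and the 10.0.0.0/8 container with /22 subnets come from this post.

```python
import ipaddress


class Grid:
    """Toy stand-in for the Infoblox grid shared by all hosts."""

    def __init__(self, container, prefix_length):
        self.container = ipaddress.ip_network(container)
        self.prefix_length = prefix_length
        self.networks = {}  # subnet -> extensible attributes

    def find_by_name(self, name):
        for subnet, attrs in self.networks.items():
            if attrs.get("Network Name") == name:
                return subnet
        return None

    def allocate_next(self, name):
        for candidate in self.container.subnets(new_prefix=self.prefix_length):
            if not any(candidate.overlaps(n) for n in self.networks):
                self.networks[candidate] = {"Network Name": name}
                return candidate
        raise ValueError("network container is full")


def request_named_pool(grid, name):
    """Reuse the subnet tagged with `name`, or allocate and tag a new one."""
    subnet = grid.find_by_name(name)
    if subnet is None:
        subnet = grid.allocate_next(name)
    return subnet


grid = Grid("10.0.0.0/8", 22)
results = {
    host: str(request_named_pool(grid, "container-net"))
    for host in ("host-0", "host-1", "host-2")
}
print(results)
# {'host-0': '10.0.0.0/22', 'host-1': '10.0.0.0/22', 'host-2': '10.0.0.0/22'}
```

The first host to ask triggers the allocation; every later host finds the tagged subnet and reuses it. This sketch ignores what happens if two hosts ask at exactly the same moment, which a real implementation would need to consider.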

Let’s look at the steps needed to set this up. First, of course, we have to set up the physical (or virtual) networking of the hosts and the Infoblox appliance, and get Docker running on the hosts. In my example, we deployed a three-node CoreOS cluster on OpenStack, using VMs for the hosts. CoreOS already has Docker installed and running. We also ran a VM version of the Infoblox appliance in the same cloud. The specific cloud provider or physical infrastructure isn’t critical, though different providers and infrastructure will need to be configured in different ways. For OpenStack, the Heat templates and scripts used may be found at https://github.com/infobloxopen/engcloud/tree/master/mddi if you wish to duplicate the environment.

Once we have the basic network topology described above, we need to finish the picture by instantiating the Infoblox IPAM driver on each host, creating the appropriate bridges via docker network create, and running some containers to test it out.

To run the IPAM driver, you log into the first host and simply run this command (modifying the Infoblox connectivity parameters for your environment):

docker run --detach -v /var/run:/var/run -v /run/docker:/run/docker \
  infoblox/ipam-driver --grid-host mddi-gm.engcloud.infoblox.com \
  --wapi-username admin --wapi-password infoblox \
  --local-view default --local-network-container "10.0.0.0/8" \
  --local-prefix-length 22

This will execute the containerized version of the IPAM driver. We don’t bother to specify the global view and other parameters, since we aren’t using them in this exercise. After this runs, we can check the logs to make sure the container came up properly:

core@host-0 ~ $ docker run --detach ... (the command above) ...

a4a5345059679226e0e769efbe7677223a7d07b91669e838145f5be639aaa579

core@host-0 ~ $ docker logs a4a5

2016/07/13 17:51:42 Created Plugin Directory: '/run/docker/plugins'

2016/07/13 17:51:42 Driver Name: 'infoblox'

2016/07/13 17:51:42 Socket File: '/run/docker/plugins/infoblox.sock'

2016/07/13 17:51:42 Docker id is '6EDV:6ZSN:DA4P:MS3B:PNWH:IL7B:JKI2:EZQY:NSAT:FXMV:SSPO:4QNA'

core@host-0 ~ $

Next, let’s create the docker network. We are going to create a bridge network, which is normally considered to be a “host local” network. That is, it can communicate outbound via NAT, but no inbound traffic is possible without port mappings. In our case, we will use a few extra commands to allow containers on the bridge to communicate directly with containers on other hosts. The command

docker network create --ipam-driver infoblox \
  --ipam-opt network-name=container-net \
  --driver bridge \
  --opt com.docker.network.bridge.name=container-net \
  container-net

instantiates a new bridge on this host (--driver bridge). The option passed via --opt allows us to name the bridge, making future commands a little easier. The --ipam-driver option is what tells Docker to use the Infoblox IPAM driver, which has already registered itself with the Docker daemon. And the --ipam-opt is used by the driver to choose the right subnet. It will look for a network in Infoblox with the specified name; if one is found, it will re-use that subnet. Otherwise, it will allocate a new one and tag it with that name. This option changes the “next available network” behavior that would normally be associated with the network create command without a --subnet option.

Let’s look at the output of the host ip addr command after running this:

core@host-0 ~ $ ip addr

[...snip irrelevant lines...]

8: container-net: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:71:3a:0f:5f brd ff:ff:ff:ff:ff:ff

inet 10.0.0.1/22 scope global container-net

valid_lft forever preferred_lft forever

core@host-0 ~ $

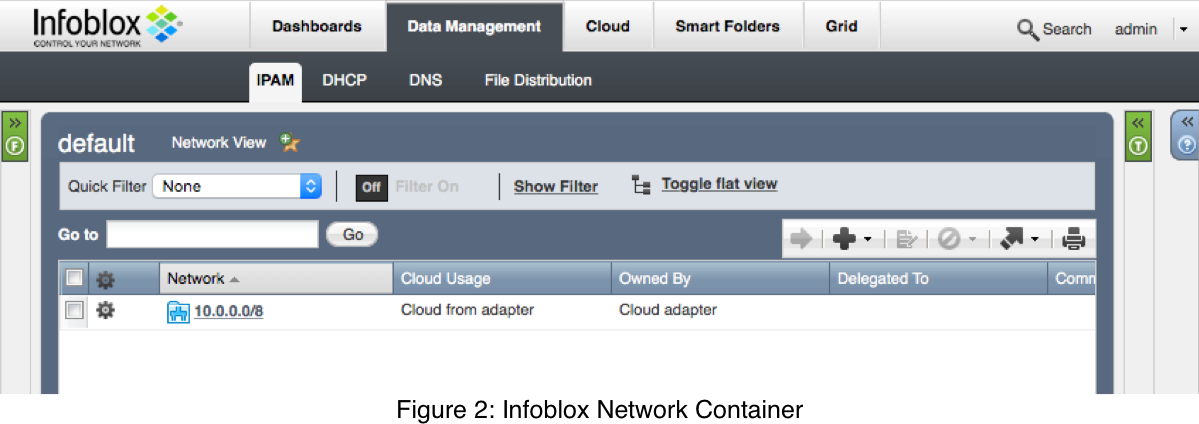

A bridge container-net has been created, and it has been given the IP 10.0.0.1. Looking back in Infoblox in Figure 2, we see that the network container was created.

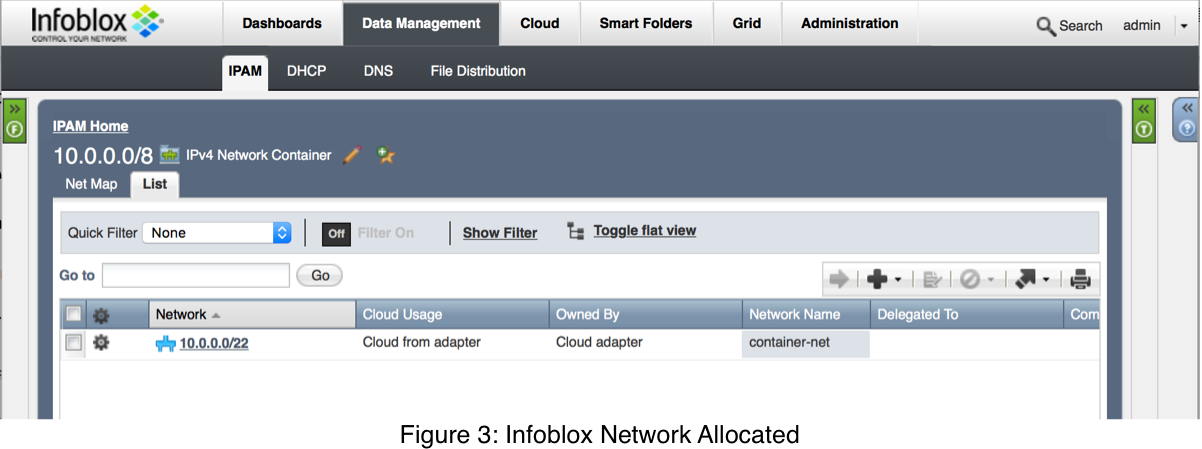

Drilling into that in Figure 3, we can see that a subnet was allocated, and the “Network Name” extensible attribute was assigned our “container-net” name.

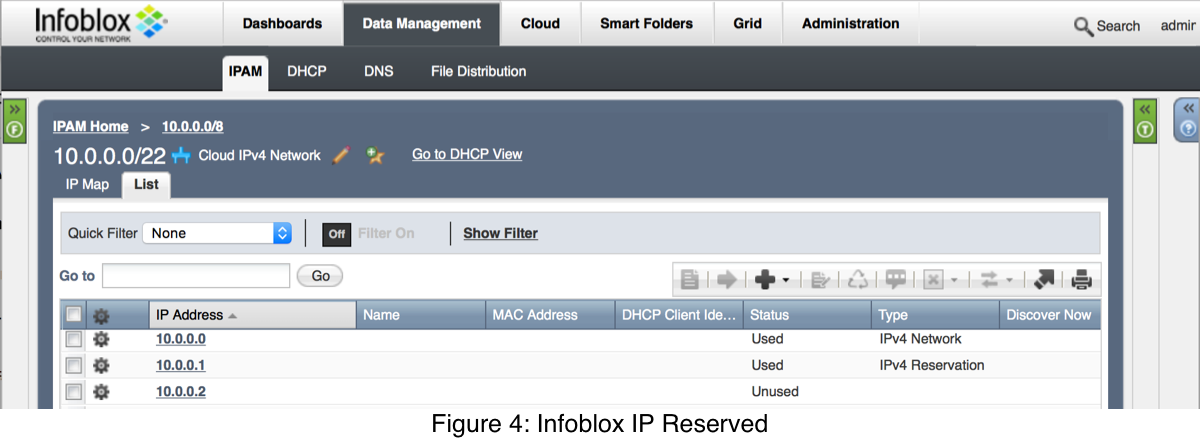

Finally, looking at Figure 4, we see that the bridge IP address 10.0.0.1 has been allocated.

This is a problem. We have an infrastructure router already with address 10.0.0.1. We don’t even really want the bridge to have an IP – ideally we would be able to tell Docker not to give it one – and just tell it to use 10.0.0.1 as the default gateway for all of the containers. However, the bridge driver does not offer that level of configuration – we’ll have to wait for the macvlan driver for that.

The IPAM driver works around this issue by always returning the same IP for the same MAC. It happens that when Docker requests an IP for the gateway, it always passes MAC 00:00:00:00:00:00. So, as long as we have reserved that IP in Infoblox without a MAC (a “Reservation”, not a “Fixed Address”), the driver will end up returning the reserved router IP. Then we can just remove this IP from the bridge; Docker will still configure the containers with the right gateway, since it still sees 10.0.0.1 as the gateway address:

core@host-0 ~ $ sudo ip addr del 10.0.0.1/22 dev container-net
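The “same IP for the same MAC” workaround can be illustrated with a small in-memory model. Everything here is a sketch under stated assumptions: MacKeyedPool is a hypothetical name, the reservation is modeled as a record stored under the all-zero MAC, and the container MAC in the usage lines is made up for illustration.

```python
import ipaddress

ZERO_MAC = "00:00:00:00:00:00"  # what the gateway request is described as passing


class MacKeyedPool:
    """Toy allocator that returns the same IP whenever the same MAC asks."""

    def __init__(self, subnet):
        self.subnet = ipaddress.ip_network(subnet)
        self.records = {}  # IP address -> owning MAC

    def reserve(self, address):
        # Assumption: a reservation has no real MAC, so it is recorded
        # under the all-zero MAC.
        self.records[ipaddress.ip_address(address)] = ZERO_MAC

    def request_address(self, mac):
        # Same MAC as an existing record: hand back the same IP.
        for address, owner in self.records.items():
            if owner == mac:
                return address
        # Otherwise allocate the lowest host address not yet recorded.
        address = next(
            (h for h in self.subnet.hosts() if h not in self.records), None
        )
        if address is None:
            raise ValueError("pool exhausted")
        self.records[address] = mac
        return address


pool = MacKeyedPool("10.0.0.0/22")
pool.reserve("10.0.0.1")                        # the infrastructure router
gateway_host0 = pool.request_address(ZERO_MAC)  # gateway request on one host
gateway_host1 = pool.request_address(ZERO_MAC)  # gateway request on another
container_ip = pool.request_address("02:42:00:00:00:01")  # hypothetical MAC
print(gateway_host0, gateway_host1, container_ip)
# 10.0.0.1 10.0.0.1 10.0.0.2
```

Every host's gateway request resolves to the router's reserved address, which is why each bridge initially comes up with 10.0.0.1 and why that address must then be removed from the bridge by hand.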

We could have just let Docker allocate an IP for the bridge (that is, have the IPAM driver return a new IP even though MAC 00:00:00:00:00:00 already has one). However, this is more of an issue than it sounds. In that case, a container’s default route would point to the bridge IP, so any traffic bound for a network other than 10.0.0.0/22 would be routed through the host network namespace and out the host management interface, rather than via 10.0.0.1 on eth1. This means that services outside of 10.0.0.0/22 would see the requests coming from the NAT’d host IPs, rather than from the original container IP.
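The routing decision a container makes here is simple enough to sketch: destinations inside its own subnet are reached directly over the bridge, and everything else goes to the default gateway. The function name next_hop is an assumption for illustration; the subnet, gateway, and grid host address are taken from this post.

```python
import ipaddress


def next_hop(destination, subnet, gateway):
    """Return 'on-link' if `destination` is inside the container's subnet,
    otherwise the gateway address that the packet is sent to."""
    if ipaddress.ip_address(destination) in ipaddress.ip_network(subnet):
        return "on-link"
    return gateway


subnet, gateway = "10.0.0.0/22", "10.0.0.1"
print(next_hop("10.0.0.3", subnet, gateway))        # on-link
print(next_hop("10.0.3.254", subnet, gateway))      # on-link
print(next_hop("172.22.128.240", subnet, gateway))  # 10.0.0.1
```

With 10.0.0.1 pointing at the infrastructure router, off-subnet traffic leaves through eth1 with the container's own source address. If the gateway were the bridge's own IP instead, the same traffic would be handed to the host and NAT'd, as described above.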

Now let’s launch a container and try to ping the infrastructure router at 10.0.0.1:

core@host-0 ~ $ docker run -it --net container-net alpine sh

/ # ping 10.0.0.1

PING 10.0.0.1 (10.0.0.1): 56 data bytes

^C

--- 10.0.0.1 ping statistics ---

7 packets transmitted, 0 packets received, 100% packet loss

/ #

So, what went wrong? Why couldn’t we ping the external router? Well, we forgot to add eth1 to the bridge. Let’s try that now by running the following command to add the eth1 interface to the container-net bridge:

/ # exit

core@host-0 ~ $ sudo brctl addif container-net eth1

This “plugs” the eth1 interface into the container-net bridge, thus connecting the bridge to the outside network. Let’s try that ping again:

core@host-0 ~ $ docker run -it --net container-net alpine sh

/ # ping 10.0.0.1

PING 10.0.0.1 (10.0.0.1): 56 data bytes

64 bytes from 10.0.0.1: seq=0 ttl=64 time=1.877 ms

64 bytes from 10.0.0.1: seq=1 ttl=64 time=0.482 ms

^C

--- 10.0.0.1 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.482/1.179/1.877 ms

/ #

And there we have it! The container attached to the internal bridge can talk to the outside router.

Now, let’s do the same thing on our other two hosts by running the same commands. Open separate ssh sessions to each host, and execute these commands:

docker run --detach -v /var/run:/var/run -v /run/docker:/run/docker \
  infoblox/ipam-driver --grid-host mddi-gm.engcloud.infoblox.com \
  --wapi-username admin --wapi-password infoblox \
  --local-view default --local-network-container "10.0.0.0/8" \
  --local-prefix-length 22

docker network create --ipam-driver infoblox \
  --ipam-opt network-name=container-net \
  --driver bridge \
  --opt com.docker.network.bridge.name=container-net \
  container-net

sudo ip addr del 10.0.0.1/22 dev container-net

sudo brctl addif container-net eth1

docker run -it --net container-net alpine sh

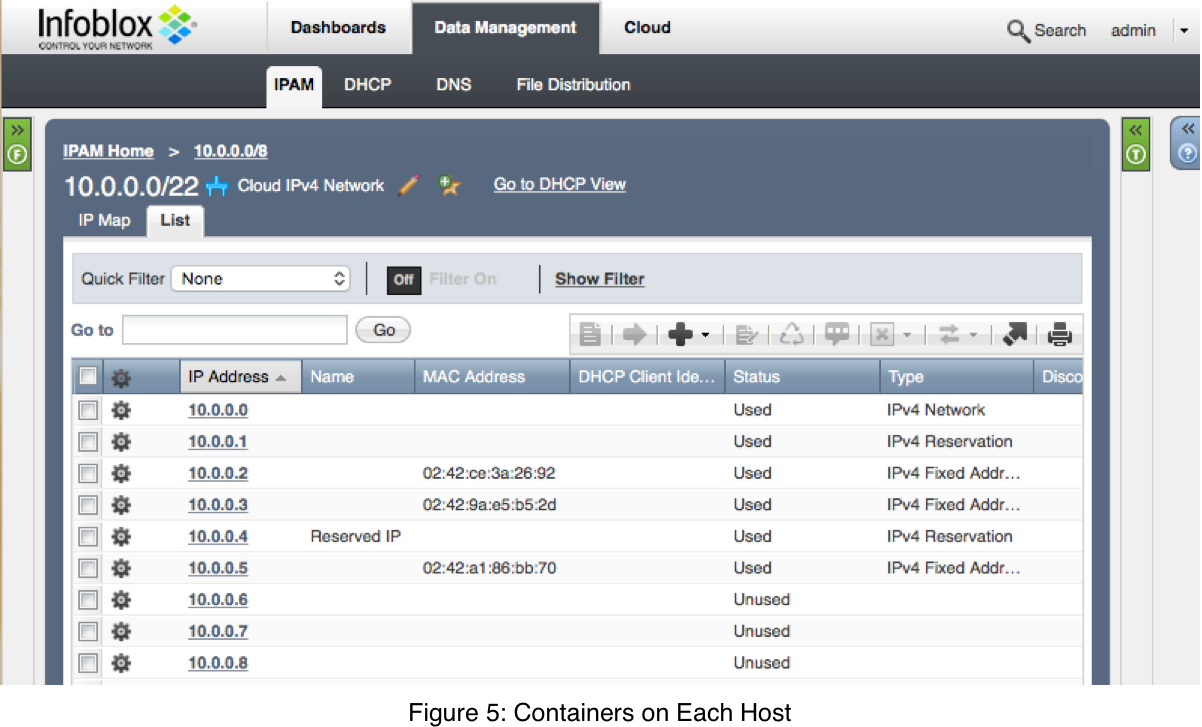

You should now see the “/ #” prompt for the alpine container on each host. If you look back in the Infoblox appliance, you will see something like Figure 5, showing the different allocated IPs and the associated container MAC addresses.

For demonstration purposes, I reserved an IP address (“Reserved IP”) via the Infoblox UI before running the container on the third host. When Docker requests the next IP, Infoblox skips that IP.

On one of the hosts, run the ip addr command to get the IP address (you can also see that the MAC matches the one in Figure 5):

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

11: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:9a:e5:b5:2d brd ff:ff:ff:ff:ff:ff

inet 10.0.0.3/22 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:9aff:fee5:b52d/64 scope link

valid_lft forever preferred_lft forever

/ #

Then, let’s listen on a network socket in that same container with:

/ # nc -l -p 2000

Switching back to another host, you can see the cross-host networking working by using telnet to connect to the specific host and port above:

/ # telnet 10.0.0.3 2000

Hi from host-0!

Back on the host running nc, we see the message show up:

/ # nc -l -p 2000

Hi from host-0!
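If you prefer a scripted version of this check, the same listen-and-send test can be written with Python's standard socket module. This is only a sketch: the helper names are hypothetical, the demo runs over loopback so that it is self-contained, and the stock alpine image used above does not ship with Python. To test across hosts, you would bind the listener to the container's address and point the sender at it (for example 10.0.0.3 and port 2000).

```python
import socket
import threading


def listen_once(server, received):
    """Accept one connection and store whatever the peer sends."""
    conn, _ = server.accept()
    with conn:
        received.append(conn.recv(1024).decode())


def send_message(host, port, message):
    """Connect to host:port, send the message, and close the connection."""
    with socket.create_connection((host, port), timeout=5) as client:
        client.sendall(message.encode())


# Loopback demo; port 0 lets the OS choose a free port.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]

received = []
listener = threading.Thread(target=listen_once, args=(server, received))
listener.start()
send_message("127.0.0.1", port, "Hi from host-0!\n")
listener.join(timeout=5)
server.close()

print(received[0], end="")  # Hi from host-0!
```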

Conclusion

In this post, you learned about the basic Docker networking commands, and how the use of Docker network commands improves the integration of networking within the Docker infrastructure. Furthermore, you saw how an external, centralized IPAM can increase the flexibility of your Docker networking solution and enable cross-host networking without the complexity and performance concerns of overlays.

One thing that would make the external IPAM even more useful would be for the Infoblox driver to capture information about the Docker containers that own each IP – for example, the host name, container name and other meta-data. Unfortunately right now this is only possible to a limited extent, because the Docker IPAM interface does not pass the information needed. We have submitted a pull request to Docker (https://github.com/docker/libnetwork/pull/977) to address this, and hope to see that added. In a future version of the driver, we will work around this issue to get some of the information by querying the Docker API using the MAC address. This will work for container-specific information, but not for network information (at least, not efficiently).
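As a rough idea of what such a MAC-based lookup might involve, the sketch below searches data shaped like the output of docker network inspect for a container with a given MAC address. It is not the driver's planned implementation: the function name is hypothetical, and the sample document is hand-written for illustration (the container ID, container name, and endpoint ID are made up, while the MAC and IP are the ones shown earlier in this post).

```python
import json


def find_container_by_mac(network_inspect, mac):
    """Return id, name, and IP of the container on this network whose
    endpoint has the given MAC address, or None if there is no match."""
    containers = network_inspect.get("Containers") or {}
    for container_id, info in containers.items():
        if info.get("MacAddress", "").lower() == mac.lower():
            return {
                "id": container_id,
                "name": info.get("Name"),
                "ip": info.get("IPv4Address"),
            }
    return None


# Hand-written sample shaped like one entry of `docker network inspect`.
sample = json.loads("""
{
  "Name": "container-net",
  "Containers": {
    "0123abcd": {
      "Name": "hypothetical_container",
      "EndpointID": "4567ef01",
      "MacAddress": "02:42:9a:e5:b5:2d",
      "IPv4Address": "10.0.0.3/22",
      "IPv6Address": ""
    }
  }
}
""")

match = find_container_by_mac(sample, "02:42:9A:E5:B5:2D")
print(match)
# {'id': '0123abcd', 'name': 'hypothetical_container', 'ip': '10.0.0.3/22'}
```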

In the next post, we will learn about the alternate networking stack for containers, the Container Network Interface (CNI), and see how we can achieve a similar result with that.