In part 2 of the Turbocharge your Infoblox RESTful API calls series, we discuss concurrency, its pros and cons, and how it can be implemented to speed up automation scripts.

OVERVIEW

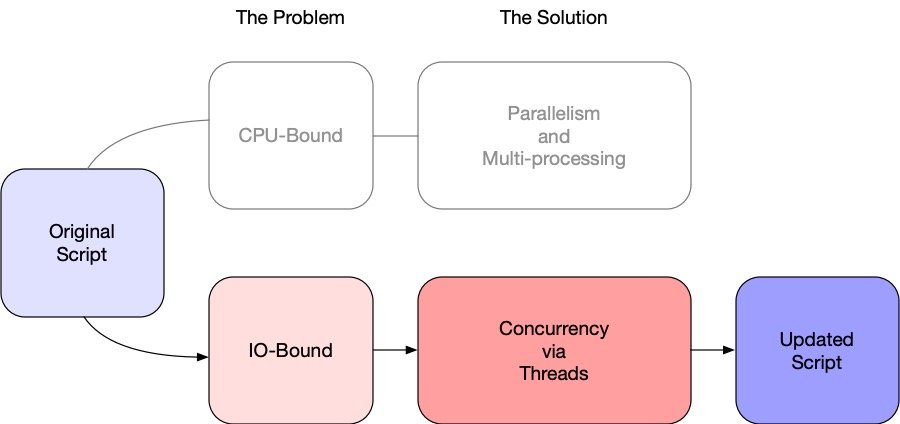

In this second article of the Turbocharge your Infoblox RESTful API calls series, we’ll take our synchronous Infoblox WAPI automation script and show how concurrency can make it run faster. We discuss what concurrency is, the pros and cons of using it in development, and how to implement it in our automation scripts. Recall that, at a high level, scripts can be CPU-bound and/or IO-bound. IO-bound scripts are best refactored using concurrency techniques, while CPU-bound scripts are best developed to take advantage of parallelism. Since our script is IO-bound (it spends most of its time waiting on the network), we’ll focus on concurrency in this second article.

The code used in development of this article series is accessible at https://github.com/ddiguru/turbocharge_infoblox_api

Concurrency

Concurrency is defined as simultaneous occurrence. Python scripts accessing the Infoblox WAPI can take advantage of concurrency through the use of threads, tasks, and processes. At a high level, each of these refers to a sequence of instructions that runs in order. Threads, tasks, and processes can be stopped at certain points of execution, and the CPU processing them can switch to a different one. The state of each one is saved so that it can be restarted, picking up where it left off. In this article, we focus on Python threads.

Characteristics of Python Threads:

- Because of CPython’s Global Interpreter Lock (GIL), only one Python thread executes Python code at a time, even on a multi-core machine

- Multiple threads make IO-bound code run faster: while one thread waits on the network, the CPU can switch to another thread that is ready to run

- The operating system knows about each thread and can interrupt it at any time, i.e. pre-emptive multitasking

- Python threads run inside a single process (they are not sub-processes) and share that process’s global memory space
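To see that threads live inside one process rather than running as sub-processes, the short sketch below (standard library only) starts four threads that each record `os.getpid()` in a shared list. Every entry should report the same process ID, and the list is visible to all threads without any copying. The `worker` function is purely illustrative and not part of the article’s scripts.

```python
import os
import threading

shared = []  # a single list object that every thread can see


def worker(n):
    # Each thread appends (thread number, process ID) to the same list;
    # in CPython a single list.append per thread needs no extra locking
    shared.append((n, os.getpid()))


threads = [threading.Thread(target=worker, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every thread to finish before reading the results

pids = {pid for _, pid in shared}
print(f'{len(shared)} entries from {len(threads)} threads, process IDs: {pids}')
```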

Concurrency can improve our original script, since the script spends time waiting on I/O for every WAPI call that creates a network. We will start with the original wapi-network.py script and convert it to use Python threads. Rewriting the program to use threads takes some additional effort, but the overall structure stays the same and only a few changes are needed.

We will use Python’s concurrent.futures library to create a runner function that takes our list of networks to work on. The function creates a pool of 16 threads, each of which independently calls an insert_network function. The 16 threads appear to run in parallel, but they are actually managed by the ThreadPoolExecutor, part of the concurrent.futures library, which hands queued work to each thread as it becomes free; while one thread waits on the network, another can run. The easiest way to create the executor is as a context manager, using the with statement to manage the creation and shutdown of the pool. We’ll improve our original script by first importing the required names from concurrent.futures and then creating the following runner function:

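```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def runner(networks):
    threads = []
    # The context manager creates the pool of 16 worker threads and waits
    # for all submitted work to finish before the with block exits
    with ThreadPoolExecutor(max_workers=16) as executor:
        for network in networks:
            # submit() schedules insert_network() and immediately returns a Future
            threads.append(executor.submit(insert_network, str(network)))
        # as_completed() yields each Future as soon as it finishes,
        # regardless of the order in which it was submitted
        for task in as_completed(threads):
            status = task.result()
            print(status)
            # logger is the module-level logger defined elsewhere in the script
            if status == 201:
                logger.info('thread successfully inserted network')
            else:
                logger.warning('thread failed to insert network')
```

Create a discrete function to insert a network object via the Infoblox WAPI as follows: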

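```python
import json


def insert_network(network):
    # s (assumed to be a requests.Session) and url (the WAPI base URL) are
    # module-level globals defined elsewhere in the script
    payload = dict(network=network, comment='test-network')
    try:
        # verify=False skips TLS certificate verification (lab use only)
        res = s.post(f'{url}/network', data=json.dumps(payload), verify=False)
        return res.status_code
    except Exception as e:
        # Return the exception instead of raising it, so runner() can log
        # the failure without losing the results of the other threads
        return e
```

Here is the full listing of our new and improved script, wapi-threaded.py: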

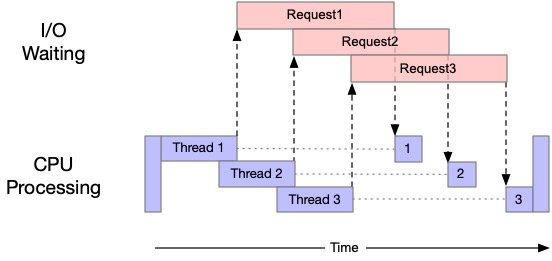
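If you want to experiment with the runner pattern without a Grid Master, the sketch below swaps insert_network() for a hypothetical `fake_insert_network()` stub that sleeps for a random interval and returns 201. It generates test networks with the standard `ipaddress` module and keeps a dictionary from each Future to its network, so each log line can name the network that finished. This is an illustration under those assumptions, not the contents of wapi-threaded.py.

```python
import logging
import random
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from ipaddress import ip_network

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def fake_insert_network(network):
    """Hypothetical stub for insert_network(): no WAPI call is made."""
    time.sleep(random.uniform(0.01, 0.05))  # simulated network latency
    return 201


def runner(networks, max_workers=16):
    statuses = []
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # Map each Future back to the network it is inserting
        futures = {executor.submit(fake_insert_network, str(net)): net
                   for net in networks}
        # as_completed() yields Futures in completion order, not submission order
        for future in as_completed(futures):
            status = future.result()
            statuses.append(status)
            if status == 201:
                logger.info('inserted %s', futures[future])
            else:
                logger.warning('failed to insert %s: %s', futures[future], status)
    return statuses


if __name__ == '__main__':
    # 16 hypothetical /24 test networks carved out of 10.0.0.0/20
    networks = list(ip_network('10.0.0.0/20').subnets(new_prefix=24))
    statuses = runner(networks)
    print(f'{statuses.count(201)} of {len(networks)} inserts returned 201')
```

Because each Future carries its own network in the dictionary, results can be handled in whatever order they complete without losing track of which request they belong to.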

The following graphically depicts how the script would execute, showing a much larger number of requests appearing to be simultaneously launched, with results being handled and returned as they are completed.

The example in this article is simple and straightforward. Developing Python scripts that use concurrency via threads can be much more difficult, depending on the tasks involved. Complex business logic will likely require you to consider locking, race conditions, and/or synchronization. Delving into all the gory details of concurrent programming with Python threads is beyond the scope of this article series.
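As a small illustration of why locking matters, suppose the threads also kept a shared tally of WAPI status codes. Incrementing a `Counter` entry is a read-modify-write, so the sketch below guards it with a `threading.Lock`. The `fake_insert()` stub and its "every 10th insert fails" rule are hypothetical stand-ins for real WAPI calls.

```python
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

status_counts = Counter()       # shared tally of status codes
counts_lock = threading.Lock()  # protects status_counts


def record_status(status_code):
    # += on a Counter entry reads, adds, then writes back; without the lock,
    # two threads could read the same old value and one update would be lost
    with counts_lock:
        status_counts[status_code] += 1


def fake_insert(i):
    """Hypothetical stub: pretend every 10th insert is rejected with a 400."""
    status = 400 if i % 10 == 0 else 201
    record_status(status)
    return status


with ThreadPoolExecutor(max_workers=16) as executor:
    # list() consumes all results, so an exception raised in a thread surfaces here
    list(executor.map(fake_insert, range(100)))

print(dict(status_counts))
```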

Testing Results

We tested our original script, wapi-network.py, against the new threaded script, wapi-threaded.py, using the same environment as in Part 1 of the series. Each script was tested with and without HTTP Keepalive enabled on the Grid Master. The results without HTTP Keepalive (in seconds) are shown in the table below:

| Test w/o HTTP Keepalive | Pass #1 | Pass #2 | Pass #3 |
|---|---|---|---|
| wapi-network.py | 39.88 | 38.31 | 38.87 |
| wapi-threaded.py | 25.98 | 24.81 | 24.57 |
| % improvement | 34.85% | 35.24% | 36.79% |

The results with HTTP Keepalive (in seconds) are shown in the table below:

| Test w/ HTTP Keepalive | Pass #1 | Pass #2 | Pass #3 |
|---|---|---|---|
| wapi-network.py | 33.85 | 33.64 | 33.33 |
| wapi-threaded.py | 19.47 | 19.57 | 20.28 |
| % improvement | 42.48% | 41.83% | 39.16% |
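The % improvement rows are the reduction in run time relative to wapi-network.py, i.e. (original − threaded) / original × 100. A minimal sketch of that calculation, using the Pass #1 timings from the tables:

```python
def pct_improvement(original, threaded):
    """Reduction in run time relative to the original script, in percent."""
    return (original - threaded) / original * 100


# Pass #1 timings in seconds, taken from the tables above
print(f'{pct_improvement(39.88, 25.98):.2f}%')  # without Keepalive -> 34.85%
print(f'{pct_improvement(33.85, 19.47):.2f}%')  # with Keepalive    -> 42.48%
```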

As the tables show, the refactored wapi-threaded.py script using Python threads outperforms our original wapi-network.py script by a wide margin, both with and without HTTP Keepalive. With Keepalive, wapi-threaded.py runs in about half the time of wapi-network.py without Keepalive. Again, enabling HTTP Keepalive alone improves the performance of our new script by roughly 17% to 25%.

NOTE: As with all the examples in this series, there is a time and place for using Python threads to add concurrency and turbocharge a script’s performance. The script in this article performs a single, simple task and was easy to implement. Generally, you will have more complicated business logic that makes using threads more complex. Threads are not a panacea, but for IO-bound work like this they are an effective way to achieve concurrency.

Conclusion

In summary, we have shown again how vital HTTP Keepalive can be to turbocharging automation scripts that use the Infoblox WAPI. We refactored our original WAPI script to use Python threads, cutting its run time by roughly 35% to 42% in our tests. We strongly recommend you consider using Python threads to let your scripts take advantage of concurrency. The caveat is that you will have to determine the optimal number of threads to use and potentially handle more complex business logic and code as a result. In the next article, Turbocharge your Infoblox RESTful API calls (part 3), we’ll look at a powerful WAPI object called the request object, which can be used to batch create our list of networks using a single WAPI POST call.
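One way to approach the thread-count question is to time the same batch of requests at several `max_workers` values. The sketch below does this against a hypothetical `fake_wapi_call()` that simply sleeps for 50 ms. Because the stub has no server-side limits, its run time keeps shrinking as workers are added; against a real Grid Master the gains are likely to level off once the server becomes the bottleneck, so treat this only as a template for measurements in a lab environment.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_wapi_call(_):
    """Hypothetical stand-in for one WAPI request: ~50 ms of network wait."""
    time.sleep(0.05)  # sleep releases the GIL, like a real socket wait
    return 201


def time_pool(n_requests, max_workers):
    """Return the wall-clock seconds needed to run n_requests with a given pool size."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        list(executor.map(fake_wapi_call, range(n_requests)))
    return time.perf_counter() - start


if __name__ == '__main__':
    for workers in (1, 4, 8, 16, 32):
        print(f'max_workers={workers:>2}: {time_pool(64, workers):.2f}s')
```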