There’s a heightened interest in Docker networking and IPAM content, so we’re going to look at rkt/CNI and how Infoblox can also be leveraged to provide centralized IPAM functions in a multi-host environment.

Like Docker, rkt is an open-source project that provides a runtime environment for deploying software applications in the form of containers (https://coreos.com/rkt). In the case of rkt, the networking of containers is provided by Container Networking Interface (CNI) (https://github.com/containernetworking/cni/blob/master/README.md).

In this blog, I am going to take you through a typical deployment use case for rkt/CNI. But before we do that, I’m going to provide some background on rkt and CNI.

Background

rkt (or Rocket, as it was originally called) was started as part of the CoreOS project. It implements a container runtime environment conforming to the App Container (appc) and Application Container Image (ACI) specifications. This is in support of the Open Container Initiative to define a vendor- and operating-system-neutral containerization standard.

As for CNI, it is the generic plugin-based networking layer for supporting container runtime environments, of which rkt is one. Indeed, the design of CNI was initially derived from the rkt Networking Proposal.

In CNI, a network is a group of uniquely addressable entities that can communicate with each other. An entity could be a container, a host or a network device. In this blog, we’ll be focusing on rkt containers, which can be added to or removed from one or more networks as they are launched and deleted by rkt.

CNI Network Configuration

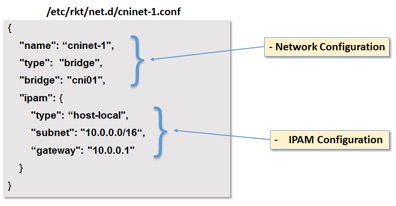

In CNI, the configuration of a network is defined using a JSON formatted configuration file. In addition to well-known fields, it also includes optional fields as well as plugin specific fields. Here’s an example network configuration file:

Figure 1: Example Network Configuration File

For a complete description of all the configuration fields, refer to: https://github.com/containernetworking/cni/blob/master/SPEC.md#network-configuration

In the meantime, let’s look at the fields included in the example above.

- name – Network name

This is the name of the network to which the configuration applies. In an rkt deployment, the network name is specified as part of the rkt run command used to launch a container.

- type – Network type

This defines the network type. In fact, this is the filename of the plugin executable that is invoked to create/remove the network.

The following types of networks are supported out of the box:

- bridge

- ipvlan

- macvlan

- bridge – Name of network bridge

This is the name of the bridge created to host the network. This is a plugin-specific field that applies only to the bridge network type.

Following that is a dictionary of the ipam specific configurations:

- type – IPAM plugin type

As in the case for network type, this is the filename of the IPAM plugin executable that is invoked for IP allocation/deallocation.

- subnet

- gateway – These are IPAM configurations, which may be optional depending on the specific IPAM plugin in use. For example, subnet is a mandatory field for the host-local ipam plugin, while it is optional for the infoblox plugin as we shall see later.
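To make the field descriptions above concrete, here is a minimal Python sketch that builds a configuration with the same fields and prints it as JSON. Only the field names come from the discussion above; the values (example-net, cni0, 10.1.0.0/16 and so on) and the helper plugin_executables are hypothetical and chosen purely for illustration.

```python
import json

# Hypothetical values; only the field names are taken from the text above.
network_conf = {
    "name": "example-net",        # network name referenced by the rkt run command
    "type": "bridge",             # filename of the network plugin executable
    "bridge": "cni0",             # plugin-specific field for the bridge type
    "ipam": {
        "type": "host-local",     # filename of the IPAM plugin executable
        "subnet": "10.1.0.0/16",  # mandatory for the host-local plugin
        "gateway": "10.1.0.1",
    },
}


def plugin_executables(conf):
    """Return the (network, IPAM) plugin filenames named by a configuration."""
    return conf["type"], conf["ipam"]["type"]


print(json.dumps(network_conf, indent=4))
print(plugin_executables(network_conf))
```

The helper simply highlights that both type fields are filenames of executables rather than abstract labels.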

CNI Plugin

As we discussed in the previous section, the network type and IPAM plugin type defined in the network configuration file actually specify executables that get run when required. The network plugin is responsible for creating the network interface for the container and for making any networking changes needed to enable the plumbing. It then assigns an IP address to the network interface and sets up routes and gateways based on the results obtained by invoking the IPAM plugin.

When a plugin is invoked, it receives the entire network configuration file via the standard input (stdin) stream. The plugin therefore has access to all the configuration attributes defined in the configuration file. The plugin returns the result in JSON format via the standard output (stdout) stream.

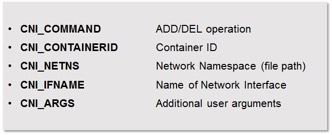

In addition to the configuration details, context information is also made available to the plugin as environment variables. These include:

Figure 2: CNI Environment Variables

- CNI_COMMAND – This defines the operation, which can either be “ADD” or “DEL”.

- CNI_CONTAINERID – This is a unique ID. If the request is associated with launching a rkt container, this is the container ID.

- CNI_NETNS – Network namespace in which the network interface resides

- CNI_IFNAME – The name of the network interface.

- CNI_ARGS – This contains additional arguments, if any, specified by user.
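The calling convention described above (configuration on stdin, context in environment variables, result on stdout) can be sketched in a few lines of Python. This is only an illustration of the flow, not a real plugin: the function name run_plugin, the sample values and the placeholder result are all assumptions, and the exact result format should be taken from the CNI specification.

```python
import json


def run_plugin(env, stdin_text):
    """Handle one invocation and return the JSON text to write to stdout."""
    conf = json.loads(stdin_text)      # the entire network configuration file
    if "name" not in conf:
        raise ValueError("network configuration has no name")
    command = env["CNI_COMMAND"]       # "ADD" or "DEL"
    if command == "ADD":
        # Placeholder result; a real IPAM plugin returns the address it allocated.
        result = {"ip4": {"ip": "10.1.0.2/16"}}
    elif command == "DEL":
        result = {}
    else:
        raise ValueError("unsupported CNI_COMMAND: %r" % command)
    return json.dumps(result)


# Hypothetical context, standing in for os.environ and sys.stdin.read().
sample_env = {
    "CNI_COMMAND": "ADD",
    "CNI_CONTAINERID": "example-container-id",
    "CNI_NETNS": "/var/run/netns/example",
    "CNI_IFNAME": "eth0",
    "CNI_ARGS": "",
}
sample_stdin = json.dumps({"name": "example-net", "type": "bridge"})

print(run_plugin(sample_env, sample_stdin))
```

In an actual plugin the two arguments would come from os.environ and sys.stdin, and the returned text would be written to sys.stdout.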

Infoblox IPAM Plugin and Daemon

Before we go into a deployment example using the Infoblox IPAM plugin, let’s discuss how the plugin works in more detail.

As mentioned above, in CNI, a plugin is simply an executable file that gets run as needed. When run, it is passed configuration via stdin and context information as environment variables. After processing based on the input provided, the plugin then returns the result in JSON format via stdout.

In our implementation we divide the functions into two components:

- Infoblox IPAM Plugin

This is the plugin that is executed by CNI just like any other plugin, and receives the configuration and context data. However, instead of directly interfacing with Infoblox to perform the IPAM functions, it only packages the input data and sends it to the Infoblox IPAM Daemon. On receiving the results from the Daemon, the plugin returns them to CNI.

- Infoblox IPAM Daemon

The Infoblox IPAM Daemon is the component that actually does the heavy lifting and interfaces with Infoblox via WAPI to perform the IPAM functions. The daemon is typically deployed as a container on each rkt host. The introduction of this daemon component is primarily for performance reasons:

- Running the daemon as a resident process allows WAPI connections to be kept alive and reused, thereby significantly improving response time.

- Keeping the plugin executable as small as possible minimizes startup cost.

Infoblox IPAM Plugin Configuration

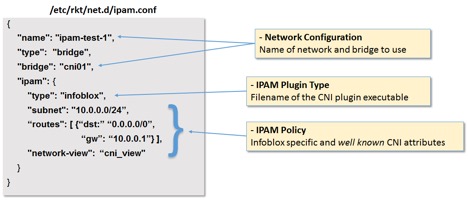

To use the Infoblox IPAM plugin, we first need to put the plugin executable, infoblox, in the /usr/lib/rkt/plugins/net directory, where CNI looks for plugins by default. Then, we need to create a configuration file in /etc/rkt/net.d for the network that we are going to create. The name of the configuration file is not important; it just needs to be unique. The key is that the “name” field matches the name of the network we want to use. Suppose the name of the network is ipam-test-1. We then create a network configuration file called ipam.conf in /etc/rkt/net.d that looks like this:

Figure 3: IPAM Configuration for Example Multi-host Deployment

Since we’ll be using this configuration file for our example multi-host deployment, let’s explore the fields one by one:

- name – This is the name of the network to which this configuration applies. In this case, the name of the network we’re creating is ipam-test-1

- type – The network type we are going to use is bridge.

- bridge – This defines the name of the bridge that will be used for the network, in our case, cni01. CNI will create the bridge if necessary, or, it will use an existing one of the same name. For our example, the bridge will be created manually with the necessary network interface attached to support the deployment.

For the ipam configurations:

- type – Specifying infoblox causes CNI to execute the infoblox plugin executable that is located in the /usr/lib/rkt/plugins/net directory.

- subnet – Here, we specify the CIDR for the subnet to be 10.0.0.0/24

- routes – Here, we specify the default route to be 10.0.0.1. Currently, the IPAM plugin does not make use of this field. This field is simply returned back as part of the IPAM plugin results, which is then injected into the container to configure the routing table.

- network-view – While the previous fields are well-known CNI configurations, the network-view field is an Infoblox plugin specific configuration. Here, we specify that the Infoblox objects will be created under a network view called cni_view. If the network view does not already exist, it will be created automatically.
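As a cross-check of the field descriptions, the following Python sketch reconstructs the configuration from the values named in the list above and renders it as JSON. The layout of the routes entry (a list with dst and gw keys) is an assumption on my part, and render_conf is a hypothetical helper; the figure remains the authoritative version of the file.

```python
import json

# Values come from the field descriptions above; the shape of the
# "routes" entry is assumed, not copied from the original file.
ipam_conf = {
    "name": "ipam-test-1",
    "type": "bridge",
    "bridge": "cni01",
    "ipam": {
        "type": "infoblox",
        "subnet": "10.0.0.0/24",
        "routes": [{"dst": "0.0.0.0/0", "gw": "10.0.0.1"}],  # assumed layout
        "network-view": "cni_view",
    },
}


def render_conf(conf):
    """Render a configuration dict as the JSON text stored in ipam.conf."""
    return json.dumps(conf, indent=4)


print(render_conf(ipam_conf))
```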

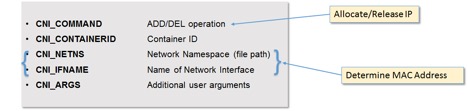

As we mentioned earlier, when the plugin is executed, the content of the above configuration is passed to it as stdin. We also mentioned that, in addition to that, context information is also made available to the plugin as environment variables. Let’s take a quick look at this.

Figure 4: CNI Environment Variables consumed by IPAM Plugin

- CNI_COMMAND – This can either be “ADD” or “DEL”, indicating to the plugin whether it is a request to “Allocate” or “Release” IP respectively.

- CNI_CONTAINERID – This is a unique ID. If the request is associated with launching an rkt container, this is the container ID. It is used to populate the “VM ID” extensible attribute of the associated Infoblox Fixed IP Address object.

- CNI_NETNS

- CNI_IFNAME – These are the network namespace and the name of the network interface, respectively, associated with the request. These two fields are used by the plugin to determine the MAC address of the network interface, which is then sent to the IPAM daemon together with the other fields.

- CNI_ARGS – This contains additional arguments, if any, specified by user. This is currently not used by the Infoblox plugin.

The responsibility of the plugin is simply to package up the network configuration and the CNI environment information and send it to the IPAM daemon – which we’re going to look at next.
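The packaging step can be pictured with a short Python sketch. The wire format between the plugin and the daemon is not described here, so the key names in the request and the function build_request are hypothetical; the point is only that the configuration, the CNI context and the discovered MAC address travel together in one message.

```python
import json


def build_request(conf, env, mac):
    """Bundle the configuration, CNI context and MAC address into one dict.

    The key names are illustrative; the real plugin defines its own format.
    """
    return {
        "command": env["CNI_COMMAND"],
        "container_id": env["CNI_CONTAINERID"],
        "netns": env["CNI_NETNS"],
        "ifname": env["CNI_IFNAME"],
        "mac": mac,
        "config": conf,
    }


# Hypothetical sample inputs.
example_env = {
    "CNI_COMMAND": "ADD",
    "CNI_CONTAINERID": "example-container-id",
    "CNI_NETNS": "/var/run/netns/example",
    "CNI_IFNAME": "eth0",
}
example_conf = {"name": "ipam-test-1", "ipam": {"type": "infoblox"}}

request = build_request(example_conf, example_env, "02:00:00:00:00:01")
print(json.dumps(request, indent=4))
```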

Infoblox IPAM Daemon Configuration

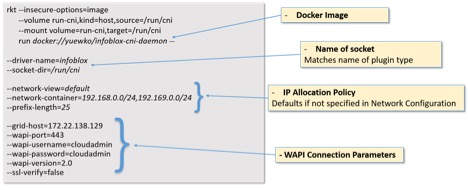

The IPAM Daemon is the component that interfaces with Infoblox via WAPI to perform the IPAM functions. The daemon is typically deployed as a container. In our example, we use a Docker image of the daemon, which is started via rkt run as follows:

Figure 5: IPAM Daemon command parameters

The important parameters are those that define the IP allocation policy. If you recall, we have specified the following in the CNI network configuration file:

"network-view": "cni_view",

"subnet": "10.0.0.0/24"

Any settings that are specified in the network configuration file will take precedence. So, in our example, the IPAM daemon would request Infoblox to allocate an IP in the “10.0.0.0/24” subnet. The IPAM daemon also ensures that the “10.0.0.0/24” has been created in the network view “cni_view”.

On the other hand, had “network-view” and “subnet” not been specified in the network configuration file, a subnet would be allocated based on the network-view, network-container and prefix-length command line parameters, which in this case are “default”, “192.168.0.0/24,192.169.0.0/24” and 25 respectively.
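The precedence rule can be sketched in Python as follows. This is not the daemon's code: resolve_policy and DAEMON_DEFAULTS are hypothetical names, the behavior when only one of the two fields is set is my assumption, and in practice it is Infoblox that picks the actual subnet. The sketch only enumerates which /25 candidates exist inside the two network containers.

```python
import ipaddress

# Daemon command line values quoted in the text above.
DAEMON_DEFAULTS = {
    "network-view": "default",
    "network-container": "192.168.0.0/24,192.169.0.0/24",
    "prefix-length": 25,
}


def resolve_policy(ipam_conf, defaults=DAEMON_DEFAULTS):
    """Return (network view, candidate subnets) for an allocation request."""
    # Settings from the network configuration file take precedence.
    view = ipam_conf.get("network-view", defaults["network-view"])
    if "subnet" in ipam_conf:
        return view, [ipaddress.ip_network(ipam_conf["subnet"])]
    # Otherwise, carve subnets of the default prefix length out of the containers.
    candidates = []
    for container in defaults["network-container"].split(","):
        network = ipaddress.ip_network(container.strip())
        candidates.extend(network.subnets(new_prefix=defaults["prefix-length"]))
    return view, candidates


print(resolve_policy({"network-view": "cni_view", "subnet": "10.0.0.0/24"}))
print(resolve_policy({}))
```

With an empty ipam section, the candidates are the four /25 networks contained in 192.168.0.0/24 and 192.169.0.0/24.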

A Multi-host rkt Deployment

In this scenario, we are going to simulate a container based application that is deployed across multiple hosts, and in which the containers need to communicate with each other. We need to create a network model that supports that within the constructs of rkt/CNI.

While CNI can provide the necessary cross-host L2 connectivity needed for the containers, the challenge becomes how to manage IP addresses of the containers across the hosts, e.g. how to avoid allocating duplicated IP addresses. This calls for a centralized IPAM solution that is capable of provisioning IP across the hosts in a holistic manner.

In this example, Infoblox is leveraged to provide the centralized IPAM functionality. To facilitate this, we utilize the Infoblox IPAM plugin to delegate IP allocation and de-allocation to Infoblox.
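To see why a centralized allocator matters, consider this toy Python model. It is not how Infoblox works internally; the Allocator class is a hypothetical stand-in that hands out the first free address in a subnet. Two hosts that each keep their own allocation state collide, while two hosts that share one allocator do not.

```python
import ipaddress


class Allocator:
    """Toy first-free IP allocator, used only to illustrate the problem."""

    def __init__(self, subnet, reserved=()):
        self._network = ipaddress.ip_network(subnet)
        self._used = {ipaddress.ip_address(a) for a in reserved}

    def allocate(self):
        for address in self._network.hosts():
            if address not in self._used:
                self._used.add(address)
                return str(address)
        raise RuntimeError("subnet exhausted")


# Per-host state: each host tracks allocations on its own.
host1 = Allocator("10.0.0.0/24", reserved=["10.0.0.1"])
host2 = Allocator("10.0.0.0/24", reserved=["10.0.0.1"])
local_ips = [host1.allocate(), host2.allocate()]

# Central state: both hosts ask the same allocator.
central = Allocator("10.0.0.0/24", reserved=["10.0.0.1"])
central_ips = [central.allocate(), central.allocate()]

print(local_ips)    # both hosts receive the same address
print(central_ips)  # each container receives a distinct address
```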

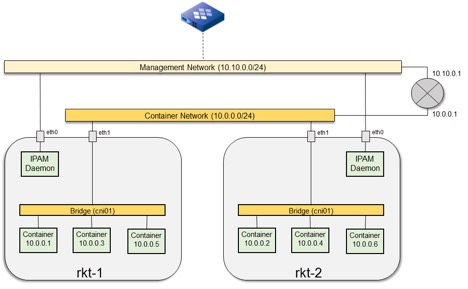

Figure 6: Multi-host rkt/CNI Deployment

The setup consists of two identically configured VMs that serve as rkt hosts, rkt-1 and rkt-2. Each rkt host is a VM that runs an Ubuntu 14.04 image with rkt runtime environment installed.

On each host, a bridge (cni01) is created, and all containers launched will be attached to this bridge. Also attached to the cni01 bridge is eth1, which is a network interface connected to the Container Network. In Ubuntu, the brctl command is used to manage Linux bridges:

brctl addbr cni01 # creates cni01 bridge interface

brctl addif cni01 eth1 # attaches eth1 to the cni01 interface

Container Network is a vSwitch that provides L2 connectivity between the rkt hosts. Overall, this establishes L2 connectivity between all the containers attached to the cni01 bridges.

Also created on each host is the eth0 network interface. This is attached to the Management Network through which the IPAM Daemon talks to Infoblox services. I’ll talk about this in the next section.

A CNI network called ipam-test-1 that uses the Infoblox IPAM plugin can now be set up. The network is served by the cni01 bridge we discussed earlier, which provides L2 connectivity across the hosts.

Verifying the Configuration

First of all, verify that the necessary configurations are in place:

- Verify that the network configuration file ipam.conf is located in /etc/rkt/net.d

- Verify that the plugin executable infoblox is located in /usr/lib/rkt/plugins/net

- Verify that the Docker image for the IPAM daemon exists by running the command docker images

The image is called infoblox-cni-daemon, for example:

REPOSITORY TAG IMAGE ID CREATED SIZE

yuewko/infoblox-cni-daemon latest a7cd28f86339 11 days ago 130.7 MB

- Verify that eth1 is attached to the cni01 bridge. Run the command,

brctl show cni01

The output should look something like:

bridge name bridge id STP enabled interfaces

cni01 8000.000c29f878b0 no eth1

Note that an IP has not been assigned to the bridge interface:

ifconfig cni01

cni01 Link encap:Ethernet HWaddr 00:0c:29:f8:78:b0

inet6 addr: fe80::20c:29ff:fef8:78b0/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:82 errors:0 dropped:0 overruns:0 frame:0

TX packets:97 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:7111 (7.1 KB) TX bytes:11710 (11.7 KB)

Instead, we configured the default gateway IP of 10.0.0.1 in the network configuration file ipam.conf (see Figure 3), which is the IP for the router interface (see Figure 6). Since we are not using the bridge interface as a gateway, there is no need to assign an IP to it. More importantly, this allows us to use the same default gateway configuration for all the rkt hosts.

This is only possible because the CNI bridge plugin allows fine-grained gateway configuration via the following fields:

- isGateway – Assigns an IP address to the bridge (Defaults to false).

- isDefaultGateway – Sets isGateway to true and makes the assigned IP the default route. (Defaults to false)

By not specifying those fields in our CNI network configuration, and therefore assuming the default values, CNI does not assign an IP for the bridge interface. Additionally, it honors the routes settings in the ipam configuration to establish the default route in the container.
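The effect of the two fields and their defaults can be summarized in a small Python sketch. It only models the behavior described above and is not the bridge plugin's implementation; gateway_behavior and the keys of its return value are hypothetical.

```python
def gateway_behavior(bridge_conf):
    """Model how isGateway and isDefaultGateway affect the bridge."""
    is_default_gateway = bridge_conf.get("isDefaultGateway", False)
    # isDefaultGateway implies isGateway.
    is_gateway = bridge_conf.get("isGateway", False) or is_default_gateway
    return {
        "bridge_gets_ip": is_gateway,
        "default_route_via_bridge": is_default_gateway,
    }


# Our configuration sets neither field, so both defaults (false) apply.
print(gateway_behavior({"name": "ipam-test-1", "type": "bridge", "bridge": "cni01"}))
print(gateway_behavior({"isDefaultGateway": True}))
```

With neither field set, the bridge gets no IP and the default route in the container comes from the routes setting in the ipam configuration.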

Finally, start the IPAM daemon using the rkt run command shown earlier in Figure 5. Obviously, you would need to modify the WAPI connection parameters applicable to your environment.

Putting it to the test

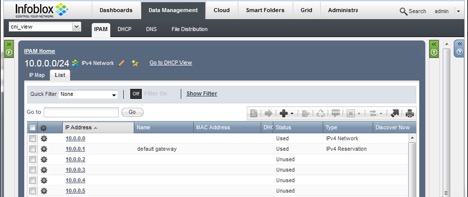

First of all, recall that the IP 10.0.0.1 has been assigned to the router interface and is configured to be the default gateway for the containers. To prevent Infoblox from using this IP to serve allocation requests, the IP needs to be reserved, as shown:

Figure 7: Default Gateway IP Reserved

Next, we are going to launch containers on both rkt hosts and demonstrate that there is no IP collision and that connectivity can be established between the containers across the hosts.

On the rkt-1 host, use the rkt run command to start an Ubuntu container specifying the network ipam-test-1, as follows:

rkt run --interactive --net=ipam-test-1 sha512-82b5a101e8bf

When the command completes and displays a shell prompt, we are actually inside the Ubuntu container. Running the command ifconfig eth0 produces the following output:

eth0 Link encap:Ethernet HWaddr ce:60:bb:50:f6:b4

inet addr:10.0.0.2 Bcast:0.0.0.0 Mask:255.255.255.0

inet6 addr: fe80::cc60:bbff:fe50:f6b4/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:21 errors:0 dropped:0 overruns:0 frame:0

TX packets:7 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:3615 (3.6 KB) TX bytes:578 (578.0 B)

where eth0 is the interface attached to the ipam-test-1 network. Note the highlighted MAC address of the interface, and more importantly, the IP address 10.0.0.2 that has been allocated.

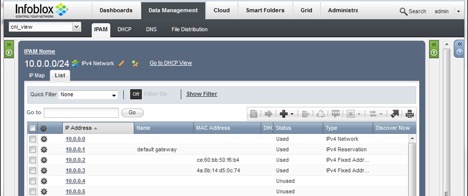

Additionally, we can verify this by logging onto the Infoblox management interface:

Figure 8: IP Allocated to container on rkt-1

As shown above, the IP address 10.0.0.2 has been allocated to the MAC address of eth0.

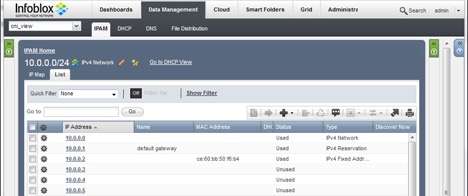

Next, we repeat the same steps to launch an Ubuntu container on rkt-2. In this case, running the ifconfig eth0 command inside the container produces:

eth0 Link encap:Ethernet HWaddr 4a:8b:14:d5:0c:74

inet addr:10.0.0.3 Bcast:0.0.0.0 Mask:255.255.255.0

inet6 addr: fe80::488b:14ff:fed5:c74/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:16 errors:0 dropped:0 overruns:0 frame:0

TX packets:6 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2528 (2.5 KB) TX bytes:508 (508.0 B)

Again, we can correlate that with the Infoblox management interface:

Figure 9: IP Allocated to container on rkt-2

More interestingly, from inside the Ubuntu container on rkt-2, we can ping the IP (10.0.0.2) of the container on rkt-1:

root@rkt-1649dade-d9b8-4cec-be9f-eadf9c865de5:/# ping 10.0.0.2

PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.

64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=0.260 ms

64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=0.171 ms

64 bytes from 10.0.0.2: icmp_seq=3 ttl=64 time=0.216 ms

64 bytes from 10.0.0.2: icmp_seq=4 ttl=64 time=0.244 ms

^C

--- 10.0.0.2 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 2997ms

rtt min/avg/max/mdev = 0.171/0.222/0.260/0.038 ms

And, lastly, we can verify the expected MAC address using the arp command:

root@rkt-1649dade-d9b8-4cec-be9f-eadf9c865de5:/# arp -an

? (10.0.0.2) at ce:60:bb:50:f6:b4 [ether] on eth0

Summary

In this blog, we have demonstrated that using the IPAM plugin allows us to easily leverage Infoblox to provide centralized IPAM service in a multi-host rkt container deployment environment. In a follow-on blog, we’ll compare and contrast the features and workflow of CNI and libnetwork.