One notable outcome of the Covid-19 pandemic is the acceleration of digital transformation and of network migration to cloud-native architectures. Gartner predicts that by 2022 more than 75% of global organizations will be running containerized applications in production, a substantial increase from less than 30% today. Michael Warrilow, research vice-president at Gartner, says, “Understanding of ‘cloud-native’ varies, but it has significant potential benefits over traditional, monolithic application design, such as scalability, elasticity and agility. It is also strongly associated with the use of containers.” (Source: https://www.computerweekly.com/feature/Containers-for-a-post-pandemic-IT-architecture)

The analyst’s figures are reflected in the latest Red Hat Enterprise Open-Source Report (2021), which shows container adoption is already widespread. Of the 1,250 IT leaders surveyed, just under 50% said they use containers in production to at least some degree. A further 37% use containers for development only, while only 16% are still evaluating or researching container adoption, according to Red Hat.

“Containers are not a nascent trend any longer,” says Red Hat technology evangelist Gordon Haff. “In this past year, we have seen a lot of companies go to containers.” So, what exactly are some of the key factors to consider with containers and networking?

Container Networking Considerations

- Complexity: Running containers across multiple environments (e.g., on-premises and in private, public and hybrid clouds) adds complexity and makes them more difficult to manage. Running containers in production can be a massive effort: a single application can have thousands of containers, and many such applications may be running in production at once. Apps deployed in multiple environments also generate substantially more IP addresses to manage than traditional deployments.

- Dynamic Scalability: Unlike traditional IP networking, container-based networking is dynamic and highly scalable. Nothing is static. Configurations are constantly spinning up and down, which creates some interesting operational challenges.

- Microservices Communications: Another key challenge with containerized networking is communication between the microservices running in the containers. Containers need to communicate with other containers, some of which are internal only while others are exposed externally.

- Multiple Environments: Many organizations deploy container environments on-premises and across multiple cloud providers, and each of these providers defines its own networking policies. Navigating these disparate environments successfully requires additional consideration.

- Security: Statically allocated IPs and ports won’t work in a container environment, which makes network security more involved. There are also increased runtime security risks, such as cryptocurrency mining, that create additional security exposure.

- Visibility and Monitoring Challenges: With multiple platforms running multiple apps and a myriad of IPs, there are bound to be visibility and monitoring challenges. It is extremely difficult to maintain a clear picture of all the apps and their associated containers, and to keep track of container health.
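The dynamic-addressing and security points above can be illustrated with a small sketch. It assumes a hypothetical `Container` record and `select` helper (neither comes from any real library) and shows why rules keyed on labels keep working when a container is replaced and returns with a new IP, whereas a rule keyed on the old address would not.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """Hypothetical record for one running container (illustration only)."""
    name: str
    ip: str  # assumed to change whenever the container is replaced
    labels: dict = field(default_factory=dict)


def select(containers, selector):
    """Return the containers whose labels include every key/value in selector."""
    return [
        c for c in containers
        if all(c.labels.get(key) == value for key, value in selector.items())
    ]


# First snapshot of the environment (all names and addresses are made up).
before = [
    Container("web-1", "10.0.1.4", {"app": "web", "env": "prod"}),
    Container("db-1", "10.0.2.9", {"app": "db", "env": "prod"}),
]

# Later snapshot: web-1 was replaced by web-2, which received a new IP.
after = [
    Container("web-2", "10.0.3.17", {"app": "web", "env": "prod"}),
    Container("db-1", "10.0.2.9", {"app": "db", "env": "prod"}),
]

selector = {"app": "web", "env": "prod"}
ips_before = [c.ip for c in select(before, selector)]
ips_after = [c.ip for c in select(after, selector)]
print(ips_before, ips_after)
```

The selector stays the same across both snapshots even though the address behind it changes, which is the property a static IP allow-list lacks.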

Kubernetes – Is it the Complete Solution?

Kubernetes can solve many of the networking issues that come with containers, but does it really address all the challenges? If not, where does it fall short?

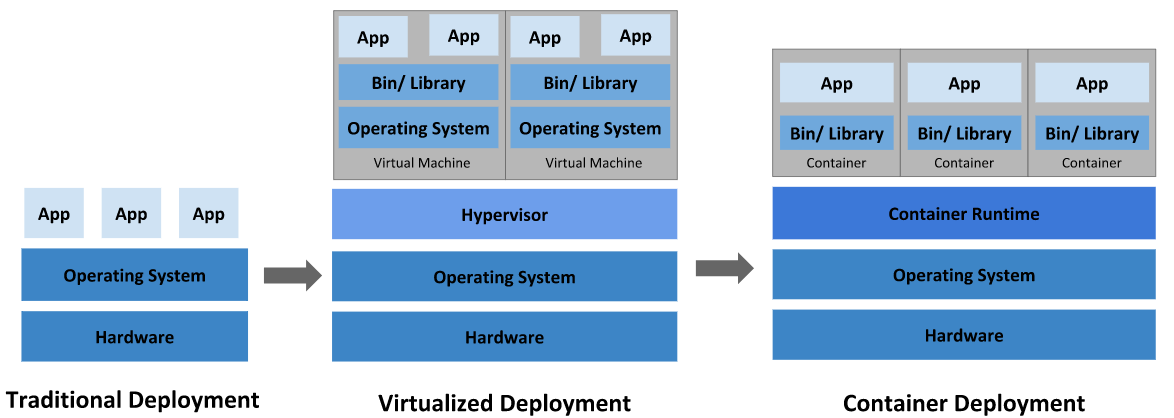

Traditional, Virtualized and Container Deployment Models

(Source: Kubernetes)

In general, Kubernetes simplifies container networking. Containers are grouped into pods, and the containers in a pod share a network namespace, which means they share the same IP address and port space. Containers within a pod can therefore use localhost to communicate with each other. You can also configure pod-to-pod, pod-to-service and internet-to-service communications. Kubernetes presents several key advantages:

- Easier Deployment Automation and Management: Kubernetes allows you to manage and automate deployments and container workloads/operations on-prem or in the cloud.

- High Availability: Kubernetes routes traffic to containers and provides load balancing to distribute traffic across pods.

- Scalability: Kubernetes can scale container workloads up or down, manually or automatically, as demand changes.

- Visibility: Kubernetes provides cluster and pod monitoring methods.
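To make the idea of distributing traffic across pods concrete, here is a minimal round-robin sketch. It is not how Kubernetes implements load balancing internally (the actual selection strategy depends on how the cluster is configured); round-robin is used here only because it is the simplest strategy to read. The `RoundRobinBalancer` class and the pod addresses are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out endpoints in a fixed rotating order (illustration only)."""

    def __init__(self, endpoints):
        endpoints = list(endpoints)
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._rotation = cycle(endpoints)

    def next_endpoint(self):
        """Return the endpoint that should receive the next request."""
        return next(self._rotation)


# Hypothetical pod addresses sitting behind a single service.
pods = ["10.244.0.5:8080", "10.244.1.7:8080", "10.244.2.3:8080"]
balancer = RoundRobinBalancer(pods)

# Six requests are spread evenly: each pod receives two.
assigned = [balancer.next_endpoint() for _ in range(6)]
for request_number, endpoint in enumerate(assigned, start=1):
    print(f"request {request_number} -> {endpoint}")
```

A client only needs the service's stable address; which pod answers a given request is decided by the balancing layer.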

What Limits Exist with Kubernetes?

While all of this sounds great, there are limits. Managing Kubernetes across multiple environments (e.g., multiple clouds and multiple continuous integration, delivery and deployment (CI/CD) pipelines) can be difficult. Security is another challenge. If a single Kubernetes cluster is compromised, vulnerabilities in other clusters may be exposed. Additionally, Kubernetes has some limits that are helpful to keep in mind when considering containerized networking:

- Application-Level Services: Application-level services such as message buses, data-processing frameworks, databases, caches and cluster storage systems are not provided as built-in services.

- Alerting: Kubernetes does not define alerting, logging or monitoring solutions but there are methods for gathering and exporting metrics.

- Configuration: Kubernetes does not provide or require a configuration system or language, but it offers a declarative API that can be targeted by various forms of declarative specification.

- Management: Kubernetes does not provide any comprehensive machine configuration, maintenance, management or self-healing systems.

- Orchestration: Kubernetes is not merely an orchestration system. Rather than executing a defined workflow step by step, it comprises a set of independent, composable control processes that continuously drive the current state toward the desired state. It does not require centralized control, which results in a system that is easier to use, extensible, robust, powerful and resilient.

- PaaS: Kubernetes is not a conventional PaaS system, but it does provide features common to PaaS offerings, such as deployment, scaling and load balancing, and it lets users integrate their own logging, monitoring and alerting solutions. These are optional and pluggable, which preserves flexibility and user choice.

- Source Code: Kubernetes does not build applications or deploy source code, which leaves build and delivery workflows to each organization’s technical requirements and preferences.
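The control-process idea in the Orchestration item above can be sketched as a simple reconciliation loop. This is a toy model under stated assumptions, not Kubernetes code: `reconcile` and `run_control_loop` are hypothetical names, and "state" is reduced to a single replica count.

```python
def reconcile(desired, current):
    """Compare desired and current replica counts and pick one corrective action."""
    if current < desired:
        return "start"
    if current > desired:
        return "stop"
    return "none"


def run_control_loop(desired, current, max_steps=100):
    """Apply one corrective action per step until current matches desired.

    Returns the sequence of replica counts observed along the way.
    """
    history = [current]
    for _ in range(max_steps):
        action = reconcile(desired, current)
        if action == "none":
            break
        current += 1 if action == "start" else -1
        history.append(current)
    return history


# Scaling up from nothing, then recovering from having too many replicas.
print(run_control_loop(desired=3, current=0))
print(run_control_loop(desired=3, current=5))
```

The loop never follows a scripted workflow; it only observes the gap between the two states and nudges the current state closer on every pass, which is why it can recover from either direction.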

Like any solution, Kubernetes operates within defined limits, but it can also solve many issues in containerized networking. Most importantly, it allows all containers running on a cluster to communicate with the other containers on that cluster. It also supports communication with internal and external microservices through load balancers and DNS, including CoreDNS and external DNS plugins.
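As a small illustration of DNS-based service discovery, the sketch below builds the in-cluster DNS name for a service using the conventional `<service>.<namespace>.svc.<cluster-domain>` pattern. The helper `service_dns_name` is hypothetical, the service and namespace names are made up, and `cluster.local` is assumed as the cluster domain, which is a common default but can be configured differently.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the conventional in-cluster DNS name for a service.

    cluster_domain is an assumption; clusters may be set up with another value.
    """
    if not service or not namespace:
        raise ValueError("service and namespace must be non-empty")
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Hypothetical service "orders" in a hypothetical namespace "shop".
name = service_dns_name("orders", namespace="shop")
print(name)
```

Because clients resolve this stable name instead of tracking pod IPs, the addresses behind the service can change without clients being reconfigured.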

Much more can be said about Kubernetes, but if you’d like additional information or assistance in planning and deploying traditional, virtualized, container or hybrid solutions on your network, visit www.Infoblox.com or contact your Infoblox Solution Architect or Account Manager today.