Introduction

In my previous blog post, I walked through how to deploy anycast DNS with Infoblox Universal DDI™ on AWS using Cloud WAN. That design showed how enterprises can build a globally available and resilient DNS fabric by combining centralized Infoblox management with AWS’s global networking backbone.

As promised, in Part Two we shift focus to Microsoft Azure. Many of the customers I work with operate hybrid or multi-cloud environments, and a common pattern I see is that the DNS challenges they face in AWS also appear in Azure. As these customers scale across multiple Azure regions, their requirements are the same:

- Ensuring consistent DNS resolution everywhere

- Achieving automated resiliency and failover (i.e., without static routing workarounds)

- Keeping the overall design operationally simple and scalable

These needs become more pressing as organizations modernize applications and adopt distributed architectures. DNS sits at the core of network functionality, and anycast is a proven way to provide low-latency resolution and high availability globally.

This post covers how to deploy Infoblox NIOS-X and anycast DNS in Azure using Azure Virtual WAN as the backbone. The lab I built mirrors the AWS design but uses native Azure Virtual WAN capabilities. This gives us clean multi-region routing, automatic route propagation, and built-in resilience, without additional networking constructs.

Why Azure Virtual WAN Is Key

Before diving into the setup, it’s important to highlight why Azure Virtual WAN is the backbone of this architecture. Traditional Azure hub-and-spoke designs rely on manual VNet peering or custom VPN/ExpressRoute links to interconnect regions. That works, but it quickly becomes complex at scale, especially when anycast routing is involved.

Azure Virtual WAN provides a managed, policy-based global transit backbone that simplifies inter-region connectivity, routing, and scale. It eliminates the need for complex manual peering between VNets and hubs, giving enterprises a unified way to route traffic securely and efficiently across Azure regions. Here is a high-level overview of what is involved in configuring an Azure Virtual WAN:

- Deploy a single Azure Virtual WAN as a global construct.

- Create regional virtual hubs under that Azure Virtual WAN.

- Microsoft automatically connects hubs using its global backbone.

- Route propagation between hubs happens natively.

- VNets attach to the closest hub, and route tables and labels control propagation in a structured way.

This model is ideal for anycast DNS. Each region can advertise the same anycast address through its local hub, and Azure Virtual WAN automatically distributes that route to all other hubs and VNets. No IPsec tunnels, no manual Border Gateway Protocol (BGP) route table updates, no hub peering maintenance. This is exactly the kind of global backbone you want for DNS.
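To make the propagation behavior concrete, the following is a minimal Python model of the idea, not of how Azure implements it: each hub holds the prefixes its local BGP peers advertise, and because the hubs in one Azure Virtual WAN are meshed over the Microsoft backbone, every hub also receives every other hub's prefixes. The hub names and the data layout are hypothetical.

```python
from collections import defaultdict

# Prefixes each hub learns from its local BGP peers (hypothetical lab data).
LOCAL_ADVERTISEMENTS = {
    "germany-hub": ["10.100.100.10/32"],
    "france-hub": ["10.100.100.10/32"],
}


def propagate(local_ads):
    """Return each hub's table: prefix -> list of hubs that originated it.

    Models a full mesh: every hub receives every other hub's prefixes,
    with no manual peering or static routes between hubs.
    """
    tables = {}
    for hub in local_ads:
        table = defaultdict(list)
        for origin_hub, prefixes in local_ads.items():
            for prefix in prefixes:
                table[prefix].append(origin_hub)
        tables[hub] = dict(table)
    return tables


if __name__ == "__main__":
    for hub, table in propagate(LOCAL_ADVERTISEMENTS).items():
        for prefix, origins in table.items():
            print(f"{hub}: {prefix} learned from {', '.join(origins)}")
```

Each hub ends up with two paths to the same /32, one per region, without any inter-hub configuration; choosing between them is ordinary BGP route selection.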

Lab Topology

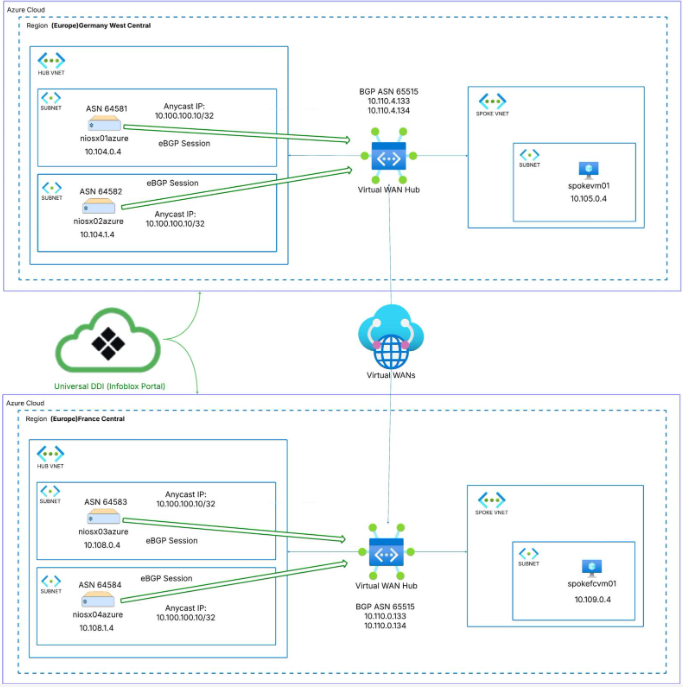

The lab consists of two regions:

- Germany West Central

- France Central

In each region I deployed:

- A Shared Services VNet hosting two NIOS-X appliances

- A spoke VNet with a test virtual machine (VM)

- A virtual hub as part of a single global Azure Virtual WAN

The anycast IP is 10.100.100.10/32. Each pair of NIOS-X appliances advertises this address to its local hub. Because every region advertises the same address, we rely on Azure Virtual WAN’s dynamic routing to send traffic to the closest NIOS-X pair, normally the one attached to the local hub, and to fall back to the other region if that pair stops advertising the route.

Figure 1. High-level topology across Germany West Central and France Central interconnected by Azure Virtual WAN

Deploying Infoblox NIOS-X in Azure

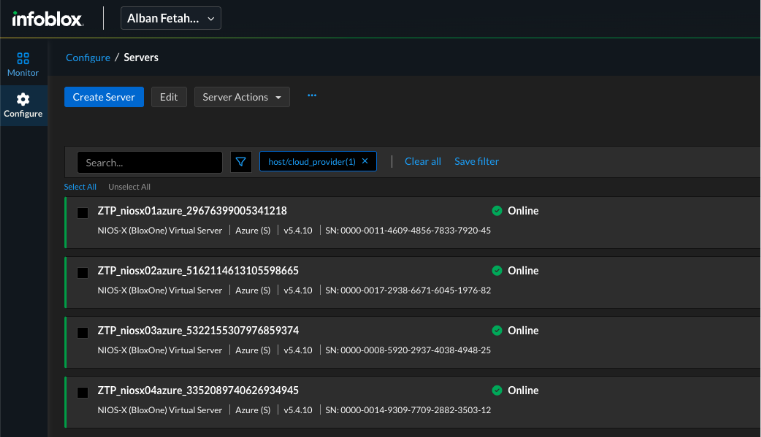

The foundation of this design is the deployment of Infoblox NIOS-X appliances in Shared Services VNets across multiple Azure regions. These appliances act as the DNS servers for the environment and are centrally managed through the Universal DDI portal. Deployment follows standard Azure VM workflows and uses Infoblox join tokens for seamless registration and management.

Deployment Steps:

- Deploy NIOS-X virtual machines in each target region within the Shared Services VNet using the appropriate Azure Marketplace image.

- Apply join tokens during initialization to ensure the appliances automatically register with the Universal DDI portal.

- Validate onboarding by confirming that the new NIOS-X instances appear under Configure → Servers in the Universal DDI portal.

Figure 2. Universal DDI portal showing NIOS-X appliances registered and online (Configure → Servers)

Why This Matters: This automated onboarding workflow eliminates manual registration steps and ensures new appliances are consistently visible in Universal DDI across regions, reducing deployment time and chances for human error.

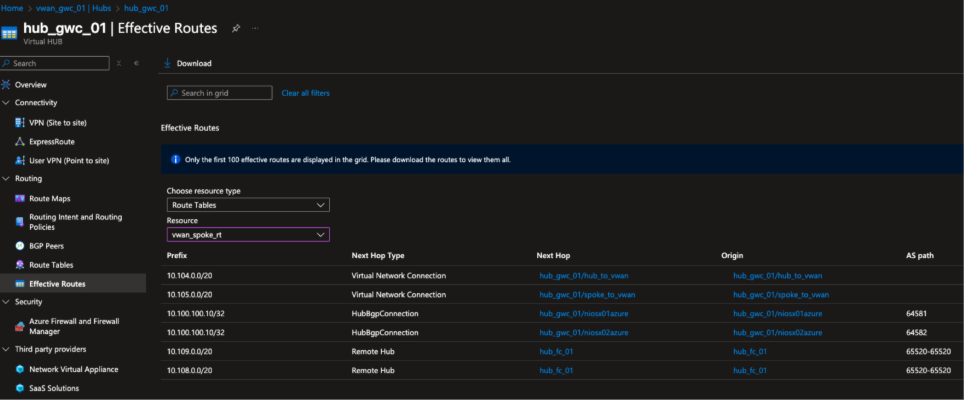

Azure Virtual WAN Hubs, VNet Attachments, and Route Tables

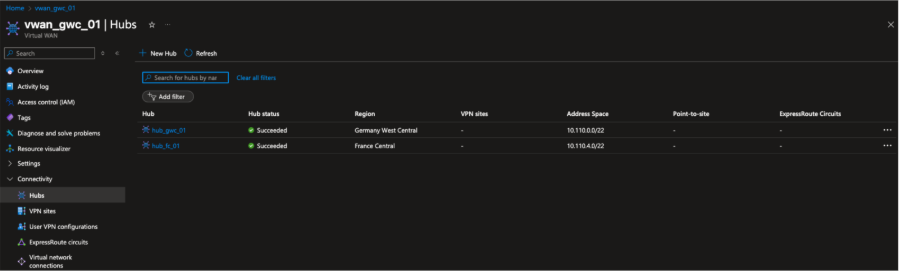

The backbone is a single Azure Virtual WAN with two hubs, one per region.

- Germany Hub: 10.110.0.0/22

- France Hub: 10.110.4.0/22
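Before attaching anything, it is worth checking the address plan. The sketch below uses Python's standard `ipaddress` module to confirm that the two hub prefixes do not overlap and that the anycast /32 sits outside both. The function name is hypothetical; in a real deployment you would add your VNet address spaces, which this post does not list, to the same check.

```python
import ipaddress

# Hub address prefixes from the lab.
HUB_PREFIXES = {
    "germany-hub": ipaddress.ip_network("10.110.0.0/22"),
    "france-hub": ipaddress.ip_network("10.110.4.0/22"),
}
# Anycast service address advertised by every NIOS-X appliance.
ANYCAST = ipaddress.ip_network("10.100.100.10/32")


def check_address_plan(hubs, anycast):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    names = list(hubs)
    # Hub prefixes must not overlap each other.
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if hubs[a].overlaps(hubs[b]):
                problems.append(f"{a} overlaps {b}")
    # The anycast /32 must sit outside every hub prefix.
    for name, prefix in hubs.items():
        if anycast.overlaps(prefix):
            problems.append(f"anycast {anycast} falls inside {name} {prefix}")
    return problems


if __name__ == "__main__":
    issues = check_address_plan(HUB_PREFIXES, ANYCAST)
    print("address plan OK" if not issues else "\n".join(issues))
```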

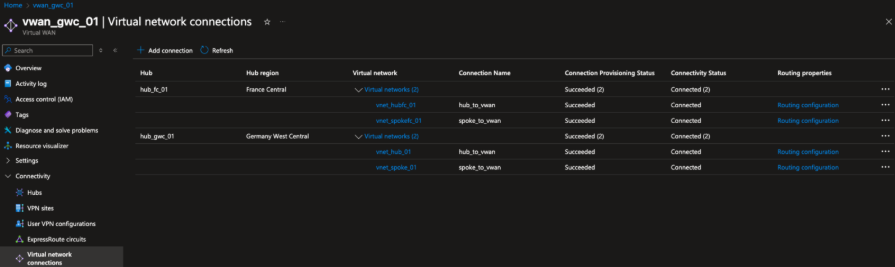

Each Shared Services VNet and spoke VNet is attached to its regional hub using a Virtual Network Connection.

To keep routing structured, I used two route tables per hub:

- Default Route Table for hub and shared services connections

- Custom Spoke Route Table for application VNets

This separation gives us greater control over what routes are propagated to workloads and ensures anycast routes are only distributed where needed. Because Azure Virtual WAN automatically syncs hub routes across regions, it is not necessary to configure any manual peering between the German and French hubs. That’s a big operational win compared to traditional designs.
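The association and propagation logic can be sketched as a small Python model. Assumptions, all hypothetical: a connection is associated with one route table and propagates its prefixes into one or more tables; the VNet prefixes `10.104.0.0/16` and `10.105.0.0/16` are illustrative (the post gives only the appliance IPs); and in this wiring, spokes propagate only into the default table. `defaultRouteTable` follows Azure's naming for a hub's default table, while `spokeRouteTable` is a made-up name.

```python
from dataclasses import dataclass, field


@dataclass
class Connection:
    """A hub connection: one associated route table, many propagation targets."""
    name: str
    prefixes: list[str]
    associated: str
    propagates_to: list[str] = field(default_factory=list)


def build_route_tables(connections):
    """Populate each route table from the connections that propagate into it."""
    tables: dict[str, set[str]] = {}
    for conn in connections:
        for table in conn.propagates_to:
            tables.setdefault(table, set()).update(conn.prefixes)
    return tables


def routes_seen_by(conn, tables):
    """A connection learns the routes of the table it is associated with."""
    return tables.get(conn.associated, set())


# Hypothetical wiring for the Germany hub (VNet prefixes are illustrative).
SHARED = Connection("shared-services", ["10.104.0.0/16"],
                    associated="defaultRouteTable",
                    propagates_to=["defaultRouteTable", "spokeRouteTable"])
NIOS_BGP = Connection("nios-x-bgp", ["10.100.100.10/32"],
                      associated="defaultRouteTable",
                      propagates_to=["defaultRouteTable", "spokeRouteTable"])
SPOKE = Connection("spoke-app", ["10.105.0.0/16"],
                   associated="spokeRouteTable",
                   propagates_to=["defaultRouteTable"])

if __name__ == "__main__":
    tables = build_route_tables([SHARED, NIOS_BGP, SPOKE])
    for conn in (SHARED, SPOKE):
        print(conn.name, sorted(routes_seen_by(conn, tables)))
```

With this wiring, the spoke learns the shared services and anycast routes but not other spokes' prefixes, which is the kind of control a separate spoke route table provides.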

Figure 3. Azure Virtual WAN overview displaying the two regional hubs deployed under a single Azure Virtual WAN, one in Germany West Central and one in France Central. This global backbone is the foundation for inter-region connectivity and anycast route propagation.

Figure 4. Azure Virtual WAN configuration showing the Virtual Network connections established to the hubs in both Germany West Central and France Central regions. Each region has a Shared Services VNet and a spoke VNet connected to its respective hub.

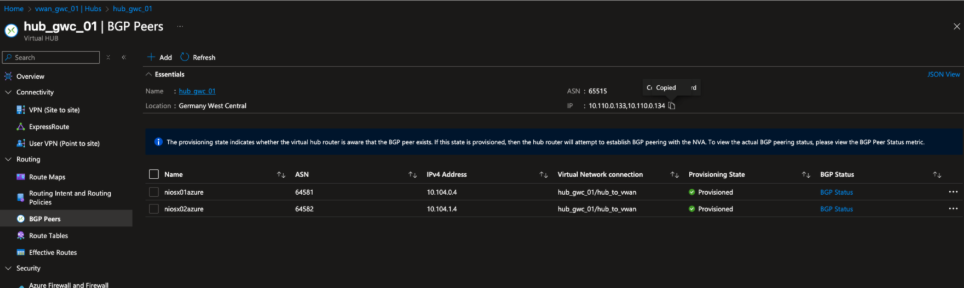

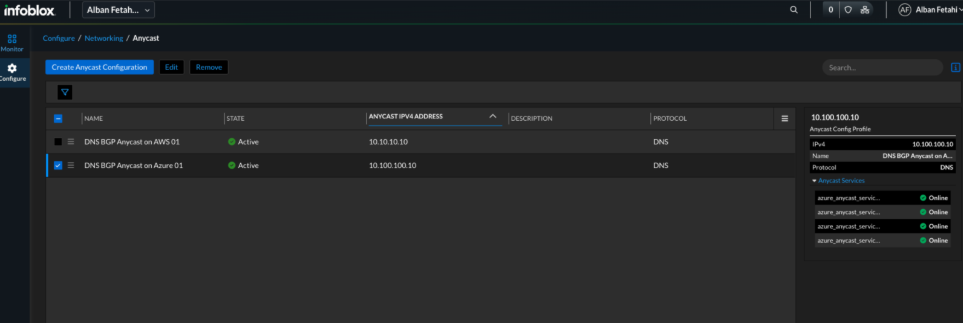

BGP Peering with Azure Virtual WAN Hubs

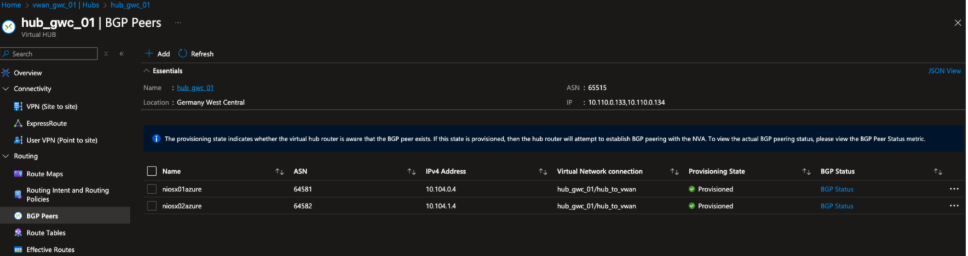

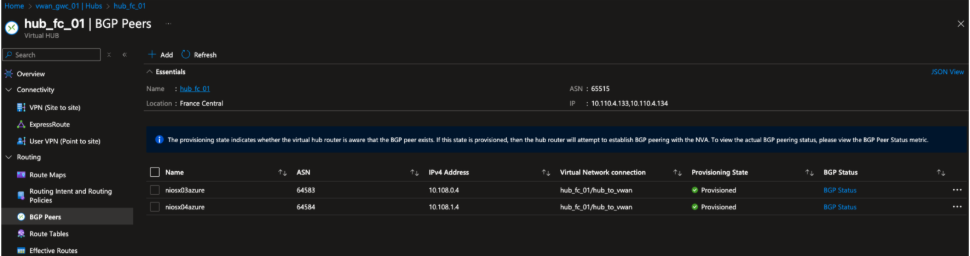

Azure Virtual WAN hubs have native BGP support. This simplifies the setup significantly. In each hub, I created two eBGP peerings, one for each NIOS-X appliance in the Shared Services VNet.

Example (Germany hub):

- Azure Virtual WAN hub ASN: 65515, hub router IPs: 10.110.0.133 and 10.110.0.134

- NIOS-X1 ASN: 64581, IP: 10.104.0.4

- NIOS-X2 ASN: 64582, IP: 10.104.1.4
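A quick pre-flight check of these peering parameters can catch common mistakes. This is a minimal sketch under stated assumptions: the reserved-ASN set reflects Azure's documentation at the time of writing and should be verified against the current list, the private-ASN and one-ASN-per-appliance rules mirror this lab's choices rather than hard requirements, and `validate_peers` is a hypothetical helper.

```python
import ipaddress

# Assumption: Azure's reserved ASNs per its documentation at time of writing; verify.
AZURE_RESERVED_ASNS = {8074, 8075, 12076, 65515, 65517, 65518, 65519, 65520}
PRIVATE_ASN_RANGE = range(64512, 65535)  # RFC 6996 16-bit private ASNs

HUB_PREFIX = ipaddress.ip_network("10.110.0.0/22")  # Germany hub
PEERS = {
    "NIOS-X1": (64581, "10.104.0.4"),
    "NIOS-X2": (64582, "10.104.1.4"),
}


def validate_peers(peers, hub_prefix):
    """Return a list of problems with the peer ASNs and IPs (empty if none)."""
    errors = []
    seen_asns = set()
    for name, (asn, ip) in peers.items():
        if asn in AZURE_RESERVED_ASNS:
            errors.append(f"{name}: ASN {asn} is reserved by Azure")
        elif asn not in PRIVATE_ASN_RANGE:
            errors.append(f"{name}: ASN {asn} is not a private 16-bit ASN")
        # Lab convention: one ASN per appliance.
        if asn in seen_asns:
            errors.append(f"{name}: ASN {asn} is already used by another peer")
        seen_asns.add(asn)
        # The appliances live in the Shared Services VNet, not in the hub itself.
        if ipaddress.ip_address(ip) in hub_prefix:
            errors.append(f"{name}: peer IP {ip} is inside the hub prefix")
    return errors


if __name__ == "__main__":
    problems = validate_peers(PEERS, HUB_PREFIX)
    print("peer parameters OK" if not problems else "\n".join(problems))
```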

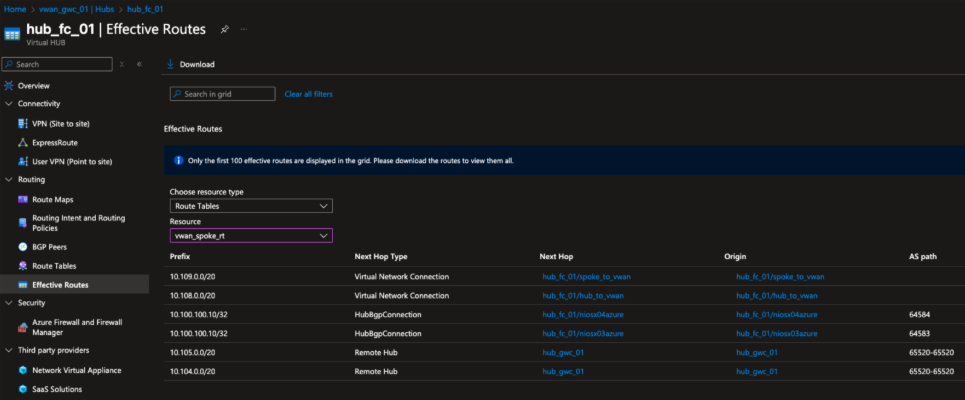

Once configured, the hub establishes a BGP session with both appliances. Each NIOS-X appliance advertises the 10.100.100.10/32 anycast prefix, which appears in the hub’s effective routes with the next hop type HubBGPConnection. Azure Virtual WAN automatically propagates this route to the France hub and to all associated spoke route tables. Spokes use the custom spoke route table, which ensures they receive the anycast address and resolve DNS through their closest hub.
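The outcome of this route selection can be modeled in a few lines. The sketch below keeps only one BGP tie-breaker, shortest AS path, and treats equal-length winners as an ECMP set. It assumes the copy of the prefix learned from the remote hub carries a longer AS path than the locally learned one; the France ASNs (64583, 64584) and the inter-hub ASN 65520 in the remote paths are hypothetical placeholders. Real BGP, and the hub router, apply more tie-breakers than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    next_hop: str            # peer that advertised the prefix
    as_path: tuple[int, ...]


def best_paths(paths):
    """Shortest-AS-path selection; equal-length winners are kept as an ECMP set."""
    if not paths:
        return []
    shortest = min(len(p.as_path) for p in paths)
    return [p for p in paths if len(p.as_path) == shortest]


# Germany hub's view of 10.100.100.10/32 (remote ASNs are hypothetical).
LOCAL = [Path("10.104.0.4", (64581,)), Path("10.104.1.4", (64582,))]
REMOTE = [Path("france-hub", (65520, 64583)), Path("france-hub", (65520, 64584))]

if __name__ == "__main__":
    print("normal:", [p.next_hop for p in best_paths(LOCAL + REMOTE)])
    # Both local appliances stop advertising the prefix: only remote paths remain.
    print("failover:", [p.next_hop for p in best_paths(REMOTE)])
```

In normal operation the Germany hub prefers its local appliances; if both stop advertising the prefix, the remote path from France is the only one left and traffic fails over without any configuration change.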

Figure 5. BGP Peers view of the Germany West Central virtual hub displaying the two NIOS-X peers configured with unique ASNs. Both peers are in the Provisioned state, meaning the peerings have been created in the hub; the anycast route appearing in the hub’s effective routes confirms that the BGP sessions are established and advertising.

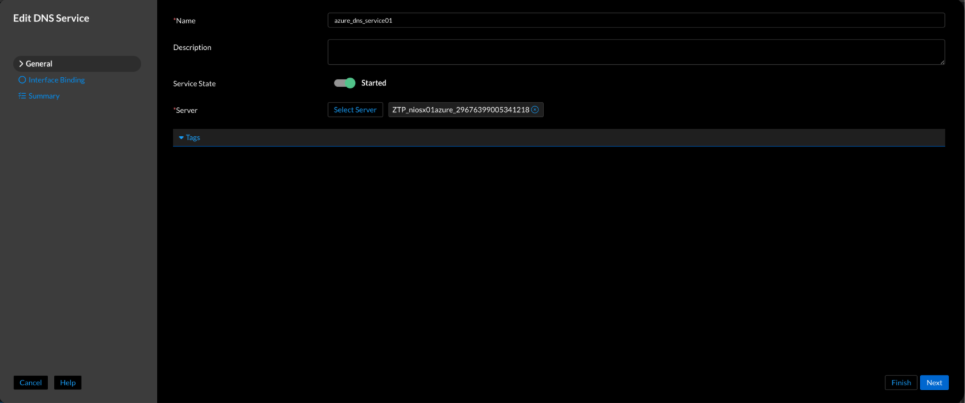

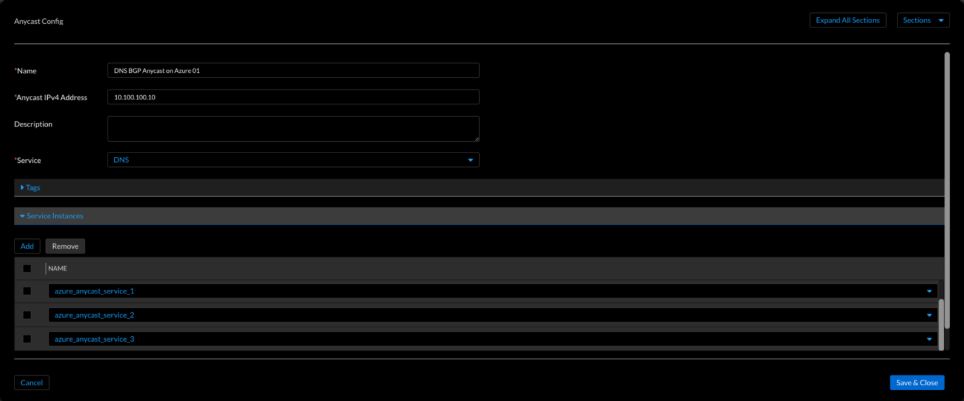

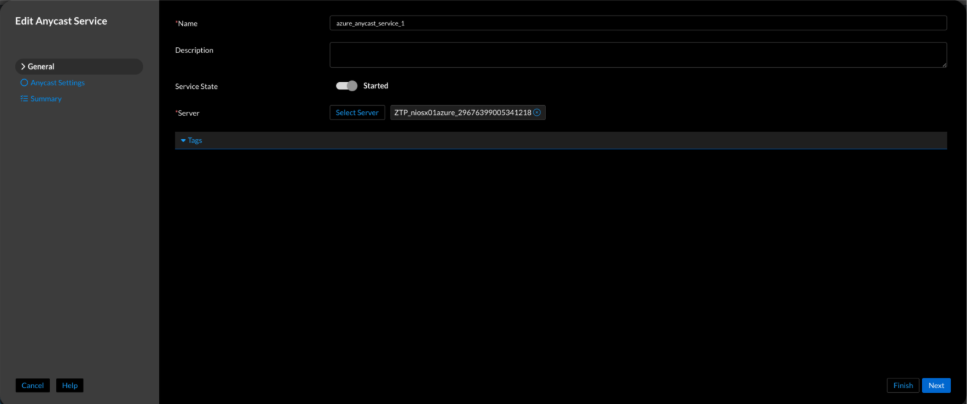

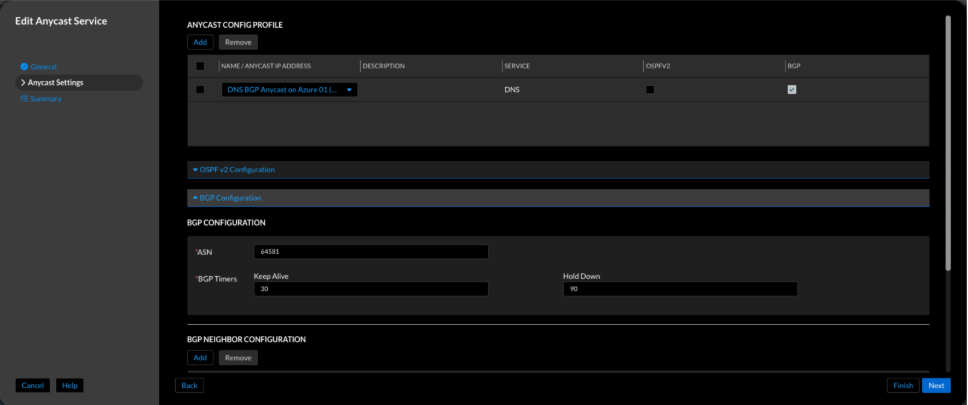

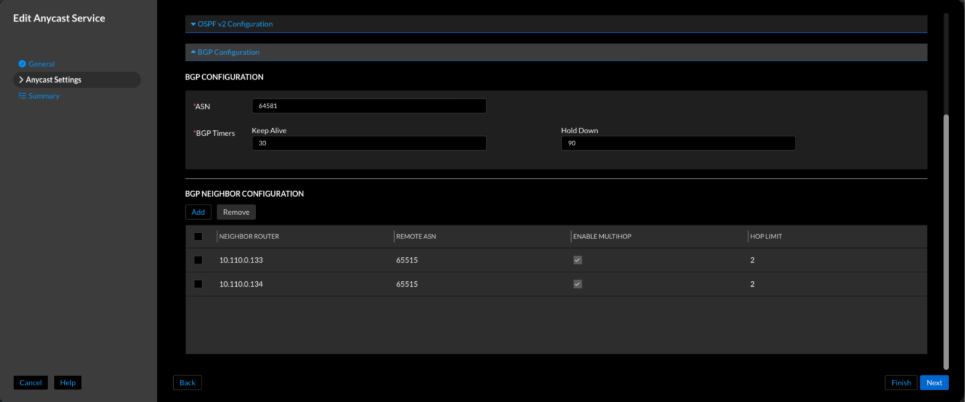

Configuring Anycast DNS in Infoblox

With connectivity in place, we enabled anycast DNS in Universal DDI.

These steps must be performed for each NIOS-X appliance in the Universal DDI portal:

- Enable DNS service under Configure → Service Deployment → Protocol Service.

- Create anycast configuration under Configure → Networking → Anycast.

- Create anycast service and associate it with DNS.

- Configure BGP peers to advertise the anycast route into the Azure Virtual WAN backbone.

Figure 6. Universal DDI Enable DNS service (Configure → Service Deployment → Protocol Service → Create Service → DNS)

Figure 7. Universal DDI anycast configuration (Configure → Networking → Anycast → Create Anycast Configuration)

Figure 8. Universal DDI anycast service creation (Configure → Service Deployment → Protocol Service → Create Service → Anycast)

Figure 9. Universal DDI BGP peer configuration for anycast service

Figure 10. Universal DDI anycast IP shows green/active
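As a checklist for what those steps produce on each appliance, here is a hypothetical Python data model, not the Universal DDI API: each appliance has its own local ASN, serves DNS on the anycast IP, and peers with both Germany hub router IPs at ASN 65515. `ApplianceAnycastPlan` and `plan_for` are illustrative names.

```python
from dataclasses import dataclass

HUB_ROUTER_IPS = ("10.110.0.133", "10.110.0.134")  # Germany hub router IPs
HUB_ASN = 65515
ANYCAST_IP = "10.100.100.10"


@dataclass
class ApplianceAnycastPlan:
    """Hypothetical summary of the portal settings for one appliance."""
    appliance: str
    local_asn: int
    anycast_ip: str
    service: str
    neighbors: list[tuple[str, int]]


def plan_for(appliance, local_asn):
    # One eBGP neighbor per hub router IP listed for the hub.
    neighbors = [(ip, HUB_ASN) for ip in HUB_ROUTER_IPS]
    return ApplianceAnycastPlan(appliance, local_asn, ANYCAST_IP, "DNS", neighbors)


if __name__ == "__main__":
    for name, asn in (("NIOS-X1", 64581), ("NIOS-X2", 64582)):
        print(plan_for(name, asn))
```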

Validation

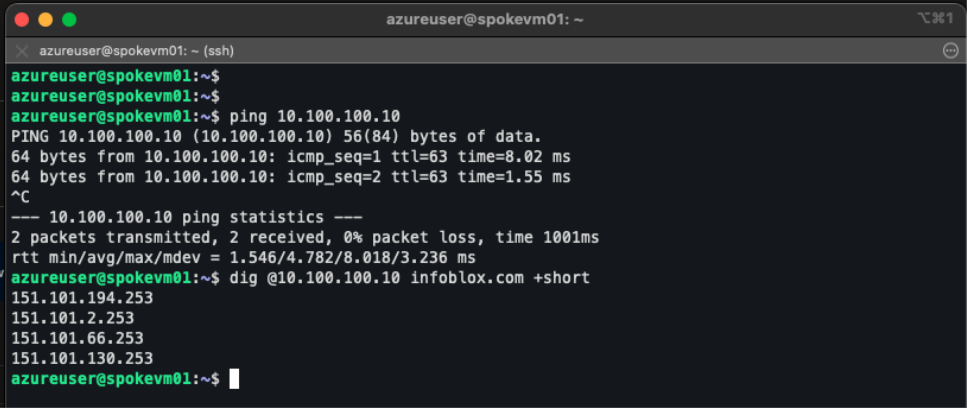

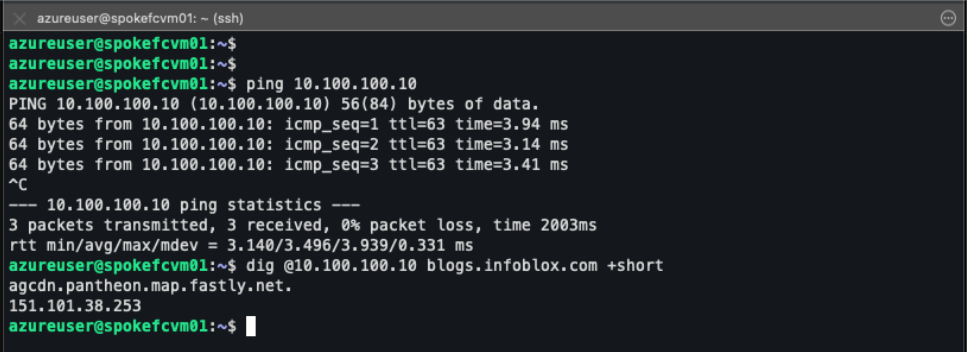

We validated the end-to-end design across both Azure regions to ensure anycast DNS works seamlessly over Azure Virtual WAN.

Checks performed:

- Routes learned from NIOS-X are visible in the Azure Virtual WAN route tables across regions.

- BGP sessions are established between NIOS-X appliances and the virtual hub routers.

- The anycast IP 10.100.100.10/32 is propagated across both regions through Azure Virtual WAN.

- DNS queries from spoke VNets successfully resolve via the anycast IP in both regions.
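To script the last check from a spoke VM without extra packages, here is a minimal Python sketch that sends one A query over UDP straight to the anycast address and reads the response header. It uses only the standard library and the RFC 1035 wire format; the function names are hypothetical, and it checks the response code and answer count without parsing the answer records.

```python
import random
import socket
import struct

ANYCAST_DNS = "10.100.100.10"


def build_query(name: str, qid: int) -> bytes:
    """Encode a recursive A-record query in RFC 1035 wire format."""
    labels = name.rstrip(".").split(".")
    if any(not 0 < len(label) < 64 for label in labels):
        raise ValueError("invalid DNS name")
    header = struct.pack("!HHHHHH", qid, 0x0100, 1, 0, 0, 0)  # RD set, 1 question
    qname = b"".join(bytes([len(l)]) + l.encode("ascii") for l in labels) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN


def parse_header(response: bytes) -> tuple[int, int, int]:
    """Return (id, rcode, answer count) from a DNS response header."""
    qid, flags, _qd, ancount, _ns, _ar = struct.unpack("!HHHHHH", response[:12])
    return qid, flags & 0x000F, ancount


def query_anycast(name: str, server: str = ANYCAST_DNS, timeout: float = 2.0):
    """Send one query to the anycast IP; return (rcode, answer count)."""
    qid = random.randint(0, 0xFFFF)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name, qid), (server, 53))
        data, _ = sock.recvfrom(4096)
    rid, rcode, ancount = parse_header(data)
    if rid != qid:
        raise RuntimeError("response ID does not match query ID")
    return rcode, ancount
```

Run `query_anycast("<a name your zones serve>")` from the spoke VM in each region; an rcode of 0 with at least one answer means whichever NIOS-X pair the hub routed the query to resolved it.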

Figure 11. Effective routes in Germany West Central hub, spoke route table, showing anycast address 10.100.100.10/32 learned through BGP

Figure 12. Effective routes in France Central hub, spoke route table, showing anycast address 10.100.100.10/32 learned through BGP

Figure 13. BGP peering configuration in Germany West Central hub showing NIOS-X appliances peered with the Azure Virtual WAN hub router

Figure 14. BGP peering configuration in France Central hub showing NIOS-X appliances peered with the Azure Virtual WAN hub router

Figure 15. VM in Germany West Central spoke VNet, showing a successful ping to anycast IP 10.100.100.10 and DNS resolution via anycast

Figure 16. VM in France Central spoke VNet, showing a successful ping to anycast IP 10.100.100.10 and DNS resolution via anycast

Why This Matters: This validation confirms that anycast DNS operates seamlessly across regions, with routing handled by Azure Virtual WAN and resiliency provided by BGP.

Conclusion

This lab demonstrates how Infoblox Universal DDI and NIOS-X can be deployed in Azure to deliver a resilient anycast-based DNS fabric across multiple regions. By integrating with Azure Virtual WAN, enterprises can leverage a single, policy-driven backbone that makes DNS globally reachable, highly available, and simpler to operate at scale.

The result is more than just improved DNS resolution. It represents a modern, cloud-native network architecture that supports enterprise goals for agility, security, and global reach while preserving the proven design principles of traditional on-premises deployments.

Lab and Terraform Code

To help you get started quickly, I’ve published the complete Terraform project that builds the Azure Virtual WAN foundation used in this lab.

The repository includes all configurations required to deploy the multi-region hub-and-spoke topology, Virtual WAN hubs, route tables, and BGP connections.

GitHub Repository: Infoblox-UDDI-and-Azure-vWAN-Terraform-Lab

After deploying the infrastructure, you can follow the steps outlined in this blog to configure NIOS-X, enable DNS services, assign the anycast IP, and establish BGP sessions with the Azure Virtual WAN hubs.

This allows readers to easily replicate the full lab environment end-to-end and experiment with anycast DNS on Azure in their own subscriptions.

Disclaimer

The configurations and architecture demonstrated in this blog are based on lab-validated scenarios. They are intended for testing and educational purposes. Customers should review, test, and adapt configurations based on their own network design, redundancy, and operational requirements.